Server Monitoring Made Simple: Stop Downtime Before It Happens

Every minute your servers are down costs your business money, productivity, and customer trust. For small and medium-sized businesses across Orange County and Southern California, unplanned downtime isn’t just an inconvenience; it’s a threat to survival. Widely cited industry estimates put the average cost of IT downtime at roughly $5,600 to $9,000 per minute. Those averages are drawn largely from larger organizations, and your own cost depends on your industry and business size.

The good news? Most server failures don’t happen without warning. With proper server monitoring tools and proactive IT support, you can detect problems before they cascade into full-blown outages. This comprehensive guide will show you how to implement effective IT infrastructure management that keeps your systems running smoothly while you focus on growing your business.

Understanding the True Cost of Server Downtime

Before diving into monitoring solutions, let’s examine what’s really at stake when your servers go offline.

Financial Impact Beyond the Obvious

When servers crash, the financial bleeding starts immediately. Lost sales are just the tip of the iceberg. Your employees sit idle, unable to access the tools they need to work. Customer service grinds to a halt. Ongoing transactions get abandoned. For e-commerce businesses, even a few minutes of downtime during peak hours can translate to thousands of dollars in lost revenue.

Recovery costs add another layer of expense. Emergency IT support comes at premium rates. Data recovery efforts consume hours or days of skilled labor. In severe cases, you might need to restore from backups or rebuild entire systems from scratch.

Reputation Damage That Lasts

Building customer trust takes years, but it can be destroyed in seconds. When your services become unavailable, customers don’t just wait patiently; they turn to competitors who can serve them immediately. Social media amplifies every outage, turning a technical issue into a public relations crisis.

Business partners and vendors lose confidence in your reliability. If you can’t keep your own systems running, how can they trust you to deliver on commitments? This erosion of trust affects contract renewals, partnership opportunities, and your ability to attract premium clients.

Compliance and Legal Ramifications

For businesses handling sensitive data or operating in regulated industries, downtime can trigger compliance violations. Healthcare organizations must maintain HIPAA-compliant systems. Financial services face strict uptime requirements. Even a brief outage during an audit period can result in penalties, mandatory remediation costs, and increased scrutiny from regulators.

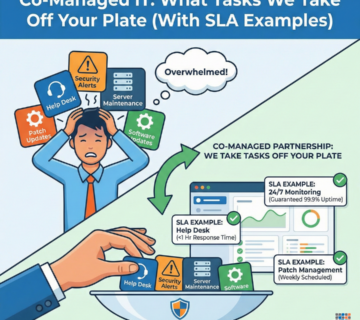

The Shift from Reactive to Proactive IT Support

Traditional IT support operates on a break-fix model: something breaks, you call for help, technicians respond. This reactive approach guarantees downtime will occur before anyone takes action. Modern IT infrastructure management flips this script entirely.

Why Reactive Support Falls Short

When you wait for problems to announce themselves through system failures, you’ve already lost control of the situation. Users report issues after they’ve been struggling for minutes or hours. By the time IT staff diagnose the problem, secondary issues may have developed. The pressure to restore service quickly can lead to rushed decisions and incomplete fixes.

Reactive support also creates unpredictable costs. Emergency fixes command premium rates. Downtime during critical business hours multiplies losses. Without visibility into underlying issues, you end up treating symptoms rather than root causes, leading to recurring problems that drain resources over time.

The Proactive Advantage

Proactive IT support fundamentally changes the game. Instead of waiting for failures, server monitoring tools continuously track system health, performance metrics, and emerging issues. This constant vigilance allows IT teams to spot warning signs—rising temperatures, increasing error rates, degrading performance—and intervene before problems affect operations.

Consider a scenario where monitoring detects a hard drive with increasing bad sectors. Proactive support replaces that drive during a planned maintenance window, avoiding the data loss and unplanned downtime that would occur if the drive failed catastrophically during business hours. The difference between controlled maintenance and emergency recovery represents thousands of dollars in saved costs and eliminated stress.

Essential Components of Effective Server Monitoring

Comprehensive server monitoring encompasses multiple layers of your IT infrastructure. Understanding these components helps you build a monitoring strategy that catches problems at every level.

Hardware Health Monitoring

Physical server components don’t last forever. Hard drives develop bad sectors. Power supplies degrade. Cooling fans wear out. Memory modules develop errors. Hardware health monitoring tracks the vital signs of physical components, alerting you when metrics fall outside normal ranges.

Temperature sensors reveal cooling system failures before overheating damages components. SMART data from hard drives can flag many impending failures weeks or months in advance, although some drives fail without any SMART warning. Power supply voltage monitoring catches electrical issues before they cause system instability. By tracking these metrics continuously, you can schedule replacements during maintenance windows rather than experiencing catastrophic failures during peak business hours.
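The range checks described above can be sketched in a few lines, assuming an agent has already collected the readings (for example, sensor values and SMART counters). The metric names and the limits in `HARDWARE_LIMITS` are hypothetical placeholders; real limits come from your hardware vendor. Sector counters are treated as “any increase is worth a look,” mirroring the bad-sector scenario described earlier.

```python
from dataclasses import dataclass

# Hypothetical normal ranges; replace with your hardware vendor's documented limits.
HARDWARE_LIMITS = {
    "cpu_temp_c": (10.0, 80.0),
    "psu_12v_rail_v": (11.4, 12.6),  # +/-5% around a nominal 12 V rail
    "fan_rpm": (1500.0, 12000.0),
}

# SMART-style counters where any increase since the last poll deserves attention.
GROWTH_COUNTERS = ("reallocated_sectors", "pending_sectors")


@dataclass
class Finding:
    metric: str
    value: float
    message: str


def check_hardware(current, previous=None):
    """Compare one poll of readings against fixed ranges and against the prior poll."""
    findings = []
    for metric, (low, high) in HARDWARE_LIMITS.items():
        value = current.get(metric)
        if value is None:
            # A missing reading can itself mean a failed sensor or agent.
            findings.append(Finding(metric, float("nan"), "no reading received"))
        elif not low <= value <= high:
            findings.append(Finding(metric, value, f"outside normal range {low}-{high}"))
    if previous:
        for counter in GROWTH_COUNTERS:
            now, before = current.get(counter, 0), previous.get(counter, 0)
            if now > before:
                findings.append(Finding(counter, now, f"grew from {before} to {now}; schedule a replacement"))
    return findings


previous = {"cpu_temp_c": 55, "psu_12v_rail_v": 12.1, "fan_rpm": 4200, "reallocated_sectors": 8}
current = {"cpu_temp_c": 86, "psu_12v_rail_v": 12.0, "fan_rpm": 4100, "reallocated_sectors": 24}
for finding in check_hardware(current, previous):
    print(finding.metric, "->", finding.message)
```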

Performance Monitoring and Resource Utilization

Slow performance often signals deeper problems. Performance monitoring tracks CPU usage, memory consumption, disk I/O rates, and network throughput. These metrics reveal whether your servers have adequate resources for current workloads or if upgrades are needed.

Performance trends also expose inefficient applications, poorly optimized databases, and resource leaks that gradually consume system capacity. When monitoring shows memory usage climbing steadily over days or weeks, you can investigate and resolve the issue before the server runs out of resources and crashes.
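One simple way to spot the steady climb described above is to watch each day’s lowest memory reading. Normal workloads rise and fall, but if even the quietest point of every day keeps creeping upward, something is probably holding on to memory. This sketch assumes samples arrive as `(datetime, percent_used)` pairs; the three-day window and the 2-point minimum rise are illustrative thresholds, not recommendations.

```python
from collections import defaultdict
from datetime import datetime


def daily_floors(samples):
    """Return each calendar day's lowest memory reading, in date order."""
    by_day = defaultdict(list)
    for ts, pct_used in samples:
        by_day[ts.date()].append(pct_used)
    return [min(by_day[day]) for day in sorted(by_day)]


def looks_like_leak(samples, days=3, min_rise=2.0):
    """Flag a possible leak when the daily floor rose on each of the last `days`
    days and climbed at least `min_rise` percentage points in total."""
    floors = daily_floors(samples)
    if len(floors) < days + 1:
        return False  # not enough history to judge
    recent = floors[-(days + 1):]
    rising_every_day = all(later > earlier for earlier, later in zip(recent, recent[1:]))
    return rising_every_day and recent[-1] - recent[0] >= min_rise


# Hypothetical server: busy afternoons, quiet nights, and a floor that creeps up 3 points a day.
samples = []
for day in range(1, 6):
    samples.append((datetime(2024, 5, day, 3), 40 + day * 3))   # overnight lull
    samples.append((datetime(2024, 5, day, 14), 70 + day * 3))  # afternoon peak
print(daily_floors(samples), looks_like_leak(samples))
```

Using the daily floor rather than the average keeps ordinary busy periods from triggering the check.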

Network Connectivity and Bandwidth Monitoring

Your servers exist within a complex network environment. Network monitoring ensures data flows smoothly between servers, storage systems, and user devices. It tracks bandwidth utilization, packet loss, latency, and connection quality.

Network issues often manifest as intermittent problems that traditional troubleshooting struggles to diagnose. Monitoring captures these transient issues, providing the data needed to identify flaky network equipment, congested links, or configuration problems that impact reliability.
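To make packet loss, latency, and connection quality concrete, here is a small sketch that summarizes a batch of probe results, such as a series of pings to a switch or upstream gateway. It assumes each probe is recorded as a round-trip time in milliseconds, or `None` when no reply arrived. Jitter here is the mean absolute difference between consecutive successful round trips, a common simplification rather than the smoothed interarrival-jitter estimator that RTP defines in RFC 3550.

```python
from statistics import mean


def summarize_probes(rtts_ms):
    """Summarize probe results: loss %, average round-trip time, and a simple jitter figure."""
    if not rtts_ms:
        raise ValueError("no probes to summarize")
    replies = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(replies)) / len(rtts_ms)
    avg_rtt = mean(replies) if replies else None
    # Mean absolute change between consecutive successful round trips.
    deltas = [abs(b - a) for a, b in zip(replies, replies[1:])]
    jitter = mean(deltas) if deltas else 0.0
    return {"loss_pct": loss_pct, "avg_rtt_ms": avg_rtt, "jitter_ms": jitter}


# Hypothetical batch of ten probes with two losses and one latency spike.
probes = [12.0, 14.0, None, 13.0, 40.0, 12.0, None, 13.0, 12.0, 13.0]
print(summarize_probes(probes))
```

A single lost probe means little on its own; the value comes from storing these summaries over time so that an intermittent problem shows up as a pattern.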

Application and Service Monitoring

Infrastructure monitoring tells you if servers are running, but application monitoring reveals whether they’re actually working correctly. Service monitoring confirms that business-critical services remain available: databases accept queries, web servers serve pages, and critical applications behave as expected.

This layer catches problems that hardware and network monitoring might miss. A database server might appear healthy from an infrastructure perspective while the database itself struggles with locked queries or corrupted indexes. Application monitoring detects these service-level issues before users encounter them.
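The database example is why a service check should exercise the service itself rather than just confirm the server answers. A minimal sketch, assuming a Python DB-API 2.0 connection object; the standard library’s `sqlite3` stands in for your real database driver, and the 0.5-second “slow” threshold is an arbitrary placeholder.

```python
import sqlite3
import time


def check_database(conn, query="SELECT 1", slow_after_s=0.5):
    """Run a lightweight query and report status and latency.

    `conn` is any DB-API 2.0 connection. The query should be cheap but must
    go through the database engine, not just the network listener.
    """
    start = time.perf_counter()
    try:
        cur = conn.cursor()
        cur.execute(query)
        cur.fetchall()
    except Exception as exc:  # driver-specific error classes all derive from Exception
        return {"status": "critical", "detail": f"query failed: {exc}"}
    elapsed = time.perf_counter() - start
    status = "warning" if elapsed > slow_after_s else "ok"
    return {"status": status, "latency_s": round(elapsed, 4)}


conn = sqlite3.connect(":memory:")
print(check_database(conn))
print(check_database(conn, "SELECT * FROM missing_table"))
```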

Security and Event Monitoring

Server monitoring includes watching for security threats and suspicious activities. Failed login attempts, unusual access patterns, unexpected network connections, and unauthorized configuration changes all represent potential security incidents.

Integrating security monitoring with infrastructure monitoring provides context that helps distinguish real threats from false alarms. When unusual network activity coincides with high CPU usage, you might be looking at a compromised server running malicious code rather than just a busy application.
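Failed login attempts are a good example of a security signal that only means something in aggregate. Below is a minimal sliding-window counter, assuming failed-login events have already been parsed from your logs into `(timestamp_seconds, source_ip)` pairs. The default of more than 10 failures in 5 minutes is a hypothetical policy, not a standard.

```python
from collections import defaultdict, deque


class FailedLoginWatcher:
    """Flag a source that exceeds `limit` failed logins within `window_s` seconds."""

    def __init__(self, limit=10, window_s=300):
        self.limit = limit
        self.window_s = window_s
        self.events = defaultdict(deque)  # source_ip -> failure timestamps inside the window

    def record(self, ts, source_ip):
        """Record one failure; return True if this source is now over the limit."""
        q = self.events[source_ip]
        q.append(ts)
        # Drop failures that have aged out of the window.
        while q and q[0] <= ts - self.window_s:
            q.popleft()
        return len(q) > self.limit


# Documentation-range addresses; a tight hypothetical policy of more than 3 failures per minute.
watcher = FailedLoginWatcher(limit=3, window_s=60)
alerts = [watcher.record(t, "203.0.113.7") for t in (0, 10, 20, 30)]
print(alerts)
```

Feeding the same events into the infrastructure alerts gives the combined view described above, such as a burst of failures from one address followed by unusual CPU or network activity.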

Choosing the Right Server Monitoring Tools

The market offers countless server monitoring tools, from simple scripts to enterprise platforms costing hundreds of thousands of dollars. Selecting appropriate tools requires understanding your specific needs and constraints.

Open Source vs. Commercial Solutions

Open source monitoring tools like Nagios, Zabbix, and Prometheus offer powerful capabilities without licensing costs. These platforms provide extensive customization options and strong community support. However, they require significant expertise to deploy and maintain. You’ll need staff with specialized skills to configure monitoring, create custom checks, and integrate diverse systems.

Commercial monitoring platforms like SolarWinds, PRTG, and Datadog provide polished interfaces, pre-built integrations, and professional support. They reduce the expertise required for deployment and ongoing management. The trade-off comes in subscription costs that scale with the size of your infrastructure.

For small businesses without dedicated IT staff, managed monitoring services offer the best balance. You gain access to enterprise-grade tools and expert configuration without building internal expertise or managing complex systems.

Cloud-Based vs. On-Premises Monitoring

Cloud-based monitoring platforms offer rapid deployment, automatic updates, and accessibility from anywhere. They eliminate the need to maintain monitoring infrastructure and scale effortlessly as your environment grows. However, they require reliable internet connectivity and may raise concerns about sending monitoring data to external services.

On-premises monitoring keeps all data within your network, addressing security and compliance requirements that restrict cloud usage. It provides monitoring capabilities even if internet connectivity fails. The downside includes maintenance overhead, hardware costs, and the need for internal expertise.

Many organizations adopt hybrid approaches, using on-premises monitoring for critical infrastructure while leveraging cloud platforms for broader visibility and centralized reporting across multiple locations.

Key Features to Prioritize

Regardless of which tools you choose, certain capabilities prove essential for effective monitoring:

Alert customization allows you to define thresholds that match your environment and business priorities. Generic alerts generate too much noise, leading to alert fatigue where real problems get lost in a sea of minor notifications.

Retaining historical data makes capacity planning and trend analysis possible. Understanding how resource usage evolves over weeks and months helps you make informed decisions about upgrades and optimization.

Integration capabilities ensure monitoring tools work together seamlessly. Your monitoring platform should integrate with ticketing systems, communication tools, and automation platforms to streamline incident response.

Mobile access lets IT teams respond to critical alerts regardless of location. When problems occur outside business hours, mobile notifications and remote access capabilities minimize response time.

Implementing a Server Monitoring Strategy

Deploying monitoring tools represents just the first step. A comprehensive strategy ensures monitoring delivers value rather than generating noise.

Start with Critical Systems

Attempting to monitor everything simultaneously leads to overwhelming complexity and delayed value. Begin by identifying your most critical systems—the servers and applications whose failure would immediately impact business operations. Deploy monitoring for these systems first, establishing baselines and refining alerts before expanding coverage.

This focused approach builds expertise and demonstrates value quickly. As your team becomes comfortable with monitoring tools and processes, gradually expand coverage to less critical systems and more detailed metrics.

Establish Meaningful Baselines

Understanding normal behavior is essential for detecting anomalies. Spend time observing your systems under typical workloads, documenting performance patterns, resource utilization, and activity cycles. These baselines inform alert thresholds and help distinguish genuine problems from normal variations.

Baselines should account for business rhythms. Resource usage on Monday morning looks different from Friday afternoon. Month-end processing may legitimately spike CPU and disk usage. Your monitoring strategy should recognize these patterns rather than treating predictable variations as problems.
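One way to make baselines respect those business rhythms is to keep a separate baseline for each hour of the week, so Monday at 9 a.m. is compared only with earlier Monday 9 a.m. readings. This sketch assumes `(datetime, value)` samples and treats anything more than three standard deviations above the slot’s mean as unusual; both the bucketing and the multiplier are illustrative choices to tune against your own history. Month-end processing would need its own calendar-aware bucket, which this sketch does not model.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean, stdev


def build_baseline(samples):
    """Group (datetime, value) samples by (weekday, hour); keep mean and std dev."""
    buckets = defaultdict(list)
    for ts, value in samples:
        buckets[(ts.weekday(), ts.hour)].append(value)
    return {
        key: (mean(values), stdev(values))
        for key, values in buckets.items()
        if len(values) >= 2  # stdev needs at least two points
    }


def is_anomalous(baseline, ts, value, k=3.0):
    """True if value sits more than k std devs above its hour-of-week norm."""
    key = (ts.weekday(), ts.hour)
    if key not in baseline:
        return False  # no history for this slot yet, so don't guess
    avg, sd = baseline[key]
    return value > avg + k * sd


# Hypothetical CPU history: busy Monday mornings, quiet Friday afternoons (April 2024).
history = [(datetime(2024, 4, d, 9), cpu) for d, cpu in [(1, 62), (8, 60), (15, 66), (22, 64)]]
history += [(datetime(2024, 4, d, 16), cpu) for d, cpu in [(5, 20), (12, 22), (19, 18), (26, 21)]]
baseline = build_baseline(history)

print(is_anomalous(baseline, datetime(2024, 4, 29, 9), 65))  # Monday 9 a.m.
print(is_anomalous(baseline, datetime(2024, 5, 3, 16), 65))  # Friday 4 p.m.
```

The same 65% reading is normal on a Monday morning but unusual on a Friday afternoon, a distinction a single fixed threshold cannot make.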

Design Alert Hierarchies

Not all alerts deserve immediate attention. Creating alert severity levels helps teams prioritize response efforts effectively. Critical alerts indicate imminent or ongoing service impact requiring immediate response. Warning alerts highlight developing issues that need attention but aren’t yet affecting operations. Informational alerts provide awareness without demanding action.

Alert routing should match severity and expertise. Critical database issues might page the database administrator directly, while general server warnings flow to the help desk during business hours and escalate only if unresolved.
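The severity levels and routing rules above can be expressed as a small lookup. Everything here is hypothetical: the thresholds, the destination names, and the 8 a.m. to 6 p.m. weekday business hours stand in for whatever notification policies your monitoring platform provides. Escalating unresolved warnings is left out to keep the sketch short.

```python
from datetime import datetime

# Hypothetical thresholds per metric: (warning_at, critical_at); higher is worse.
THRESHOLDS = {
    "disk_used_pct": (80, 95),
    "db_replication_lag_s": (30, 300),
}

# Hypothetical specialist destinations for critical alerts.
CRITICAL_OWNERS = {"db_replication_lag_s": "dba-oncall-pager"}


def classify(metric, value):
    warning_at, critical_at = THRESHOLDS[metric]
    if value >= critical_at:
        return "critical"
    if value >= warning_at:
        return "warning"
    return "info"


def route_alert(metric, value, now):
    """Return (severity, destination) for one reading."""
    severity = classify(metric, value)
    business_hours = now.weekday() < 5 and 8 <= now.hour < 18
    if severity == "critical":
        # Critical alerts page someone immediately, a specialist if one is defined.
        return severity, CRITICAL_OWNERS.get(metric, "ops-oncall-pager")
    if severity == "warning":
        # Warnings go to the help desk; after hours they queue for the next business day.
        return severity, "helpdesk" if business_hours else "helpdesk-next-day-queue"
    return severity, "dashboard-only"


print(route_alert("db_replication_lag_s", 400, datetime(2024, 4, 6, 2)))  # early Saturday
print(route_alert("disk_used_pct", 85, datetime(2024, 4, 1, 10)))         # Monday morning
```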

Document Response Procedures

Alerts lose effectiveness if recipients don’t know how to respond. For each monitored condition, document the appropriate response procedure. What does high CPU usage mean? Which applications should you check? What steps resolve common issues?

These documented procedures, often called runbooks, transform monitoring alerts into actionable information. They enable less experienced staff to handle routine issues confidently while escalating genuinely complex problems to specialists.

Schedule Regular Reviews

Monitoring configurations require ongoing maintenance. Schedule monthly or quarterly reviews to assess alert effectiveness, adjust thresholds based on changing conditions, and add monitoring for new systems or applications.

These reviews also identify opportunities to improve monitoring coverage. Are there blind spots where problems go undetected? Do certain alerts generate noise without value? Regular refinement keeps your monitoring strategy aligned with business needs.

Common Server Monitoring Pitfalls to Avoid

Even well-intentioned monitoring implementations can fail to deliver expected benefits. Understanding common pitfalls helps you avoid wasting effort on ineffective approaches.

Alert Fatigue from Poor Configuration

When monitoring generates constant alerts for minor issues, teams start ignoring notifications. This alert fatigue proves dangerous because genuine critical alerts get lost in the noise. Recipients become desensitized, assuming most alerts represent false alarms rather than real problems.

Combat alert fatigue by carefully tuning thresholds and alert conditions. Use progressive alerting that escalates only when problems persist or worsen. Implement maintenance windows that suppress alerts during planned activities. Regularly review alerts that generate frequent notifications without leading to corrective action.
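Two of those tactics, requiring a condition to persist before anyone is notified and ignoring results during planned maintenance, can be sketched together. The example assumes one check result per polling cycle; `required_consecutive=3` and the maintenance window are hypothetical values.

```python
from datetime import datetime


class PersistentAlert:
    """Notify only after N consecutive failing checks, and never during maintenance."""

    def __init__(self, required_consecutive=3, maintenance_windows=()):
        self.required = required_consecutive
        self.windows = list(maintenance_windows)  # (start, end) datetime pairs
        self.failures = 0
        self.notified = False

    def in_maintenance(self, now):
        return any(start <= now < end for start, end in self.windows)

    def observe(self, now, failing):
        """Feed one check result; return True only when a notification should go out."""
        if self.in_maintenance(now):
            return False  # planned work: ignore results entirely
        if not failing:
            self.failures, self.notified = 0, False  # condition cleared, so reset
            return False
        self.failures += 1
        if self.failures >= self.required and not self.notified:
            self.notified = True  # notify once per episode, not on every cycle
            return True
        return False


window = (datetime(2024, 4, 6, 1, 0), datetime(2024, 4, 6, 3, 0))
alert = PersistentAlert(required_consecutive=3, maintenance_windows=[window])
checks = [(datetime(2024, 4, 6, 2, m), True) for m in (0, 5, 10, 15)]  # failures during maintenance
checks += [(datetime(2024, 4, 6, 9, m), f)
           for m, f in [(0, True), (5, False), (10, True), (15, True), (20, True), (25, True)]]
results = [alert.observe(t, failing) for t, failing in checks]
print(results)
```

Only the third consecutive failure outside the maintenance window produces a notification; the single blip at 9:00 and the failures during planned work stay silent.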

Monitoring Without Action Plans

Knowing a problem exists doesn’t help if you lack plans for addressing it. Monitoring without defined response procedures creates confusion and delays during incidents. Teams waste precious time debating what to do rather than executing practiced responses.

Every monitored condition should have a documented response plan. Who investigates? What information do they need? What are the standard troubleshooting steps? When should incidents escalate? Answering these questions before incidents occur enables rapid, effective response.

Insufficient Context for Troubleshooting

Alerts that simply state “server down” or “high CPU usage” provide insufficient context for effective troubleshooting. Monitoring should capture and present relevant context: what changed recently, what other systems are affected, what was happening before the problem started.

Correlation across monitoring data sources reveals patterns invisible when examining metrics in isolation. High CPU usage combined with network saturation and increased database queries tells a different story than high CPU usage alone. Building context into monitoring helps teams understand problems quickly and choose appropriate solutions.
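A simple way to build that context is to attach every other out-of-range metric from the same time window to the alert, instead of sending the triggering metric alone. The metric names and limits below are hypothetical, and commercial correlation engines do far more, but the principle is the same.

```python
# Hypothetical upper limits for metrics sampled in the same time window.
LIMITS = {"cpu_pct": 85, "net_out_mbps": 800, "db_queries_per_s": 1500, "failed_logins_per_min": 20}


def enrich_alert(trigger, snapshot):
    """Build an alert message for `trigger` that lists co-occurring anomalies."""
    related = sorted(
        name for name, value in snapshot.items()
        if name != trigger and name in LIMITS and value > LIMITS[name]
    )
    msg = f"{trigger}={snapshot[trigger]} (limit {LIMITS[trigger]})"
    if related:
        details = ", ".join(f"{n}={snapshot[n]}" for n in related)
        msg += f"; also elevated: {details}"
    else:
        msg += "; no other monitored metrics elevated"
    return msg, related


snapshot = {"cpu_pct": 97, "net_out_mbps": 920, "db_queries_per_s": 2100, "failed_logins_per_min": 2}
message, related = enrich_alert("cpu_pct", snapshot)
print(message)
```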

Neglecting Monitoring Infrastructure

Monitoring systems themselves require maintenance and monitoring. A monitoring platform that crashes or loses connectivity fails exactly when you need it most. Ensure monitoring infrastructure receives the same attention to reliability and redundancy that you apply to production systems.

External monitoring that checks availability from outside your network provides an important safety net. If your internal monitoring fails due to network issues or power outages, external checks alert you that problems exist even when internal systems can’t report them.
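The “who monitors the monitor” problem is often handled with a heartbeat, sometimes called a dead man’s switch: the internal monitoring system checks in with an external service on a schedule, and the external side raises an alert when the check-ins stop. Below is a minimal sketch of the external side, assuming a heartbeat every 60 seconds and three missed beats allowed before alerting (both hypothetical values); the site names are placeholders.

```python
import time


class HeartbeatTracker:
    """Runs outside your network; reports sites that have stopped checking in."""

    def __init__(self, interval_s=60, missed_beats_allowed=3):
        self.grace_s = interval_s * missed_beats_allowed
        self.last_seen = {}  # site -> timestamp of its last heartbeat

    def beat(self, site, now=None):
        self.last_seen[site] = time.time() if now is None else now

    def silent_sites(self, now=None):
        """Sites whose last heartbeat is older than the grace period.

        A site must check in at least once before it is tracked.
        """
        now = time.time() if now is None else now
        return sorted(s for s, seen in self.last_seen.items() if now - seen > self.grace_s)


tracker = HeartbeatTracker()
tracker.beat("office-monitor", now=1000)
tracker.beat("colo-monitor", now=1150)
print(tracker.silent_sites(now=1150), tracker.silent_sites(now=1300))
```

Because the alert is raised by the absence of a signal, it still fires when a power or network failure prevents the internal system from reporting anything at all.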

Advanced Monitoring Capabilities

Basic monitoring catches obvious problems, but advanced capabilities provide deeper insights and more sophisticated problem prevention.

Predictive Analytics and Trend Analysis

Machine learning algorithms can analyze historical monitoring data to predict future problems. By identifying patterns that precede failures, predictive analytics enables proactive intervention before issues impact operations.

Trend analysis reveals gradual changes that might otherwise go unnoticed. Disk space that decreases slowly over months eventually causes problems, but the gradual nature makes it easy to overlook until space runs out. Trend monitoring highlights these slow-moving issues while time remains to address them properly.

Automated Remediation

Some problems have well-understood solutions that don’t require human judgment. Automated remediation allows monitoring systems to take corrective action automatically when specific conditions occur. Restarting a hung service, clearing temporary files when disk space runs low, or failing over to backup systems can happen immediately without waiting for human intervention.

Automation must be implemented carefully with appropriate safeguards. Test automated responses thoroughly and implement logging to track automated actions. Start with low-risk remediation tasks and expand gradually as you gain confidence in automation reliability.
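Those safeguards (logging every automated action and capping how often automation may act before a person takes over) can be sketched as a wrapper around any remediation action. The `restart_print_spooler` function is a hypothetical stand-in for a real service restart, and the limit of two attempts per hour is an arbitrary example.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("remediation")


class GuardedRemediation:
    """Run an automated fix, but stop and escalate after too many recent attempts."""

    def __init__(self, name, action, max_attempts=2, window_s=3600):
        self.name = name
        self.action = action          # callable returning True if the fix worked
        self.max_attempts = max_attempts
        self.window_s = window_s
        self.attempts = []            # timestamps of attempts inside the window

    def run(self, now=None):
        now = time.time() if now is None else now
        self.attempts = [t for t in self.attempts if now - t < self.window_s]
        if len(self.attempts) >= self.max_attempts:
            log.warning("%s: %d attempts in window; escalating to a human", self.name, len(self.attempts))
            return "escalated"
        self.attempts.append(now)
        ok = self.action()
        log.info("%s: automated attempt %s", self.name, "succeeded" if ok else "failed")
        return "fixed" if ok else "failed"


def restart_print_spooler():
    """Hypothetical placeholder for a real service restart."""
    return True


fix = GuardedRemediation("print-spooler", restart_print_spooler)
outcomes = [fix.run(now=t) for t in (0, 600, 1200, 4000)]
print(outcomes)
```

A problem that keeps coming back after an automated fix is a sign the fix is only treating a symptom, which is why the third attempt within the hour goes to a person instead.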

Synthetic Monitoring and User Experience Tracking

Traditional monitoring checks system health from a technical perspective, but users care about whether applications work correctly from their viewpoint. Synthetic monitoring simulates user interactions, continuously testing critical workflows to ensure everything functions as expected.

By regularly executing automated tests that mimic real user actions—logging in, searching for information, completing transactions—synthetic monitoring detects problems that infrastructure metrics might miss. An application might appear healthy from a server perspective while actually timing out or returning errors to users.
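A synthetic check is essentially a scripted user journey with a time budget for each step. The sketch below shows that structure under some assumptions: each step is a plain Python callable (in a real deployment these would send HTTP requests or drive a headless browser), and the step names and budgets are hypothetical. It stops at the first failing step because later steps usually depend on earlier ones, for example a search that requires a login.

```python
import time


def run_synthetic_check(steps):
    """Run (name, callable, budget_s) steps in order and stop at the first error.

    Returns a list of (name, status, seconds) tuples, where status is "ok",
    "slow" (finished but over budget), or "error: <message>".
    """
    results = []
    for name, step, budget_s in steps:
        start = time.perf_counter()
        try:
            step()
        except Exception as exc:
            results.append((name, f"error: {exc}", time.perf_counter() - start))
            break  # later steps depend on this one
        elapsed = time.perf_counter() - start
        results.append((name, "ok" if elapsed <= budget_s else "slow", elapsed))
    return results


def log_in():
    pass  # placeholder: submit test credentials to the login page


def search():
    raise RuntimeError("search page returned HTTP 500")  # simulated failure


def checkout():
    pass  # placeholder: complete a test transaction


report = run_synthetic_check([("login", log_in, 2.0), ("search", search, 3.0), ("checkout", checkout, 5.0)])
for name, status, secs in report:
    print(f"{name}: {status} ({secs:.3f}s)")
```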

Capacity Planning and Forecasting

Monitoring data provides the foundation for effective capacity planning. By analyzing utilization trends, you can predict when systems will exhaust available resources and plan upgrades before capacity constraints impact performance.

Good capacity planning prevents both over-provisioning (wasting money on unnecessary resources) and under-provisioning (allowing performance degradation or outages). Data-driven forecasting takes guesswork out of infrastructure decisions.
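A common back-of-the-envelope forecasting model assumes utilization grows by a steady percentage each month. If utilization today is u0 and it grows by g per month, then after n months it is u0 × (1 + g)^n, so a threshold T is first reached in the smallest whole month n at or above ln(T / u0) / ln(1 + g). A sketch with hypothetical numbers:

```python
import math


def months_until_threshold(current_pct, monthly_growth, threshold_pct):
    """Whole months until utilization first reaches the threshold under compound growth.

    Assumes utilization after n months = current_pct * (1 + monthly_growth) ** n.
    """
    if current_pct >= threshold_pct:
        return 0
    if monthly_growth <= 0:
        return None  # not growing, so the threshold is never reached under this model
    n = math.log(threshold_pct / current_pct) / math.log(1 + monthly_growth)
    return math.ceil(n)


# Hypothetical file server: 50% full today, growing 10% per month, upgrade wanted before 80%.
months = months_until_threshold(current_pct=50, monthly_growth=0.10, threshold_pct=80)
print(f"About {months} months until storage reaches 80%")
```

Real growth is rarely this smooth, so a forecast like this is best refreshed each month from the latest monitoring data.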

Integrating Monitoring with IT Service Management

Server monitoring delivers maximum value when integrated with broader IT service management processes.

Incident Management Integration

When monitoring detects problems, incidents should be created automatically in your ticketing system. This integration ensures nothing falls through the cracks and provides a complete record of issues and responses. Tickets capture investigation findings, resolution steps, and time spent, creating valuable knowledge for future incidents.

Automated ticket creation also enables metrics tracking. How long does problem resolution typically take? Which types of issues occur most frequently? What’s the mean time to resolution for different problem categories? These metrics inform continuous improvement efforts.
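One practical detail of automatic ticket creation is deduplication: a flapping alert should add notes to one open ticket rather than open dozens, which would also distort the metrics above. This is a minimal in-memory sketch; the `TicketStore` class and its fields are hypothetical stand-ins for your ticketing system’s actual API.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Ticket:
    ticket_id: int
    key: str
    summary: str
    notes: list = field(default_factory=list)
    closed: bool = False


class TicketStore:
    """Hypothetical stand-in for a ticketing system, keyed by alert identity."""

    def __init__(self):
        self._ids = count(1)
        self.tickets = []

    def open_or_update(self, host, check, message):
        key = f"{host}:{check}"  # at most one open ticket per host/check pair
        for t in self.tickets:
            if t.key == key and not t.closed:
                t.notes.append(message)
                return t
        ticket = Ticket(next(self._ids), key, f"{check} on {host}", [message])
        self.tickets.append(ticket)
        return ticket


store = TicketStore()
a = store.open_or_update("fs01", "disk_space", "92% used")
b = store.open_or_update("fs01", "disk_space", "94% used")
c = store.open_or_update("web02", "http_check", "HTTP 503")
print(a is b, len(store.tickets), len(a.notes))  # True 2 2
```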

Change Management Correlation

Monitoring data becomes more valuable when correlated with change records. If performance degrades after a software update or configuration change, the timing connection helps pinpoint root causes. Integrating monitoring with change management systems provides this correlation automatically.

Before implementing changes, review relevant monitoring data to establish baseline performance. After changes, monitoring confirms whether performance improved, degraded, or remained stable. This objective assessment replaces subjective opinions about change impact.
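The before-and-after comparison can be made objective with a few lines of code. This sketch assumes `(timestamp, value)` samples for a metric where lower is better and values are positive, such as response time, plus a change timestamp taken from the change record; the one-hour window and the 10% tolerance for calling a result “stable” are hypothetical choices. A real assessment should also account for normal variation, for example with the baselines discussed earlier.

```python
from statistics import mean


def assess_change(samples, change_ts, window_s=3600, tolerance=0.10):
    """Compare mean values in equal windows before and after a change.

    Assumes positive values where lower is better (e.g. response time in ms).
    Returns (verdict, before_mean, after_mean).
    """
    before = [v for ts, v in samples if change_ts - window_s <= ts < change_ts]
    after = [v for ts, v in samples if change_ts <= ts < change_ts + window_s]
    if not before or not after:
        raise ValueError("need samples on both sides of the change")
    b, a = mean(before), mean(after)
    relative = (a - b) / b
    if relative > tolerance:
        verdict = "degraded"
    elif relative < -tolerance:
        verdict = "improved"
    else:
        verdict = "stable"
    return verdict, b, a


# Hypothetical response times (ms) around a software update at t = 10,000 s.
change_at = 10_000
samples = [(change_at - 3000 + 600 * i, 200) for i in range(5)]  # before the update
samples += [(change_at + 600 * i, 260) for i in range(5)]         # after the update
print(assess_change(samples, change_at))  # ('degraded', 200, 260)
```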

Problem Management and Root Cause Analysis

While incident management focuses on restoring service quickly, problem management seeks permanent solutions to recurring issues. Monitoring data supports root cause analysis by providing objective evidence about system behavior before, during, and after incidents.

Patterns visible in monitoring data often reveal underlying problems that manifest as various symptoms. Multiple seemingly unrelated incidents might share common root causes identifiable through careful analysis of monitoring metrics.

Building a Culture of Proactive IT Management

Technology alone doesn’t prevent downtime. Effective monitoring requires organizational commitment to proactive management principles.

Executive Buy-In and Resource Allocation

Proactive monitoring requires investment in tools, training, and staff time. Securing executive support requires demonstrating the business value of preventing downtime rather than simply reacting to problems.

Calculate the cost of downtime in your environment and present monitoring as insurance against those losses. Highlight how proactive approaches reduce emergency support costs, extend hardware lifecycles through better maintenance, and improve employee productivity by maintaining system availability.
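A rough downtime cost can be estimated from a few inputs most businesses already know. The function below is a simplified model (lost revenue plus idle labor plus a one-time recovery cost), and every figure in the example is hypothetical. Reputational and compliance costs, discussed earlier, are left out because they are hard to quantify, so the result is best read as a floor.

```python
def outage_cost(hours, revenue_per_hour, affected_staff, loaded_hourly_wage,
                productivity_loss=1.0, recovery_cost=0.0):
    """Estimate the direct cost of one outage.

    productivity_loss is the fraction of affected staff time that is wasted
    (1.0 = completely idle; 0.5 = working at half capacity via workarounds).
    """
    lost_revenue = hours * revenue_per_hour
    idle_labor = hours * affected_staff * loaded_hourly_wage * productivity_loss
    return lost_revenue + idle_labor + recovery_cost


# Hypothetical 40-person firm: a 4-hour outage during business hours.
cost = outage_cost(hours=4, revenue_per_hour=2_500, affected_staff=40,
                   loaded_hourly_wage=45, productivity_loss=0.8, recovery_cost=3_000)
print(f"Estimated cost: ${cost:,.0f}")  # Estimated cost: $18,760
```

Comparing a figure like this with the yearly cost of monitoring makes the insurance argument concrete for leadership.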

Training and Skill Development

Monitoring tools provide capabilities, but people must use them effectively. Invest in training that helps staff understand monitoring concepts, use tools proficiently, and interpret data correctly. Cross-train team members so multiple people can respond to different types of alerts.

Regular training on new features and capabilities ensures your team leverages monitoring tools fully. Many organizations use only a fraction of their monitoring platform’s capabilities simply because staff don’t know additional features exist.

Continuous Improvement Mindset

Treat monitoring as an evolving practice rather than a one-time implementation. Review monitoring effectiveness regularly and identify opportunities for improvement. Learn from incidents by asking what additional monitoring might have detected problems earlier or provided better context for troubleshooting.

Encourage team members to suggest monitoring enhancements based on their experience. The people closest to day-to-day operations often identify valuable monitoring opportunities that managers might overlook.

Measuring Monitoring Effectiveness

To justify ongoing investment in monitoring and identify areas for improvement, measure how effectively your monitoring strategy prevents downtime and enables rapid problem resolution.

Key Performance Indicators

Mean time to detection (MTTD) measures how quickly monitoring identifies problems. Lower MTTD means issues get detected closer to when they occur, minimizing impact duration.

Mean time to resolution (MTTR) tracks how long problems take to resolve. While monitoring alone doesn’t resolve issues, effective monitoring reduces MTTR by providing better context and catching problems before they become complex.

Unplanned downtime percentage shows whether proactive monitoring succeeds in preventing outages. Track this metric over time to demonstrate improvement as your monitoring strategy matures.

False positive rate indicates monitoring accuracy. High false positive rates waste time on non-issues and contribute to alert fatigue. Continuously refine monitoring to reduce false positives while maintaining sensitivity to real problems.
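These indicators fall out of incident records directly, provided each record captures when the problem started, when monitoring detected it, when it was resolved, and whether the alert was real. The sketch assumes exactly those hypothetical fields, with times in minutes from an arbitrary reference point. Definitions vary between organizations: here MTTR runs from detection to resolution, and every real incident is counted as downtime from start to resolution, which overstates downtime for issues caught before users noticed.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class AlertRecord:
    started: float       # when the problem actually began (minutes)
    detected: float      # when monitoring raised the alert
    resolved: float      # when service was restored
    real_problem: bool   # False if the alert turned out to be a false positive


def monitoring_kpis(records, period_minutes):
    real = [r for r in records if r.real_problem]
    if not real:
        raise ValueError("need at least one real incident to compute MTTD and MTTR")
    downtime = sum(r.resolved - r.started for r in real)
    return {
        "mttd_min": mean(r.detected - r.started for r in real),
        "mttr_min": mean(r.resolved - r.detected for r in real),
        "false_positive_rate": (len(records) - len(real)) / len(records),
        "unplanned_downtime_pct": 100 * downtime / period_minutes,
    }


# Hypothetical month: two real incidents and two false alarms.
records = [
    AlertRecord(started=0, detected=2, resolved=32, real_problem=True),
    AlertRecord(started=1000, detected=1004, resolved=1064, real_problem=True),
    AlertRecord(started=5000, detected=5000, resolved=5001, real_problem=False),
    AlertRecord(started=9000, detected=9000, resolved=9000, real_problem=False),
]
kpis = monitoring_kpis(records, period_minutes=30 * 24 * 60)
print(kpis)
```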

Business Impact Metrics

Translate technical metrics into business terms that demonstrate value to leadership. Calculate downtime costs avoided through proactive problem prevention. Track productivity gains from maintaining system availability. Document customer satisfaction improvements resulting from more reliable services.

These business-focused metrics help secure continued support and resources for monitoring initiatives by demonstrating clear return on investment.

Frequently Asked Questions

What’s the difference between server monitoring and network monitoring?

Server monitoring focuses specifically on the health and performance of individual servers—tracking CPU usage, memory consumption, disk space, application performance, and hardware health. Network monitoring examines the connectivity and performance between systems, including bandwidth utilization, packet loss, latency, and network device health. Comprehensive IT infrastructure management requires both types of monitoring working together, since problems in either area can impact business operations.

How quickly can server monitoring tools detect problems?

Detection speed depends on your monitoring configuration and the type of problem. Critical issues like complete server failures can be detected within seconds through heartbeat monitoring. Performance degradation and resource exhaustion might take minutes to detect as monitoring tools compare current metrics against baseline thresholds. Gradual problems like slowly filling disk space or memory leaks might be detected hours or days before they cause actual failures, depending on how aggressively you configure trend monitoring.

Do small businesses really need professional server monitoring?

Absolutely. Small businesses often lack the IT resources to quickly recover from downtime, making prevention even more critical than for enterprises with large IT departments. The cost of professional monitoring represents a fraction of what you’d lose from even a single extended outage. Many small businesses across Orange County have discovered that managed monitoring services cost less than hiring dedicated IT staff while providing 24/7 coverage and specialized expertise.

Can monitoring prevent all server downtime?

While monitoring dramatically reduces unplanned downtime, no system can prevent 100% of outages. Monitoring excels at catching gradual failures, resource exhaustion, and performance degradation before they cause complete failures. However, sudden catastrophic failures like instantaneous hardware death or natural disasters may still cause brief outages. The goal is preventing the majority of predictable, avoidable downtime while minimizing recovery time for unavoidable incidents.

What happens to monitoring during internet outages?

This depends on your monitoring architecture. With a cloud-based platform, an internet outage cuts the link between your site and the service, so real-time cloud alerting for that site stops; depending on the product, local agents may keep collecting data and upload it once connectivity returns. On-premises monitoring continues functioning without internet access, although alerts it sends by email or other internet-based channels may not get through. For complete coverage, implement hybrid monitoring with both internal systems and external monitoring that checks availability from outside your network. External monitoring alerts you to internet connectivity problems that internal systems can’t report.

How much does professional server monitoring cost?

Monitoring costs vary based on the number of servers, complexity of your environment, and level of service required. Basic monitoring for a handful of servers might cost a few hundred dollars monthly, while comprehensive monitoring for complex environments runs higher. However, considering that a single hour of downtime can cost thousands or tens of thousands of dollars, monitoring represents excellent value. Many managed IT providers include monitoring as part of comprehensive service packages rather than charging separately.

Will monitoring slow down my servers?

Modern monitoring tools are designed for minimal performance impact. Well-configured monitoring agents typically use only a small fraction of a server’s CPU and memory under normal conditions. The slight overhead is far outweighed by the benefits of preventing performance problems and downtime. Poorly configured monitoring or legacy tools might have higher impact, which is why working with experienced IT professionals ensures monitoring enhances rather than hinders performance.

Can I monitor cloud servers the same way as physical servers?

Yes, but with some differences in approach. Cloud servers can be monitored using the same metrics and tools as physical servers—CPU, memory, disk, network, and application performance. However, cloud environments also benefit from monitoring cloud-specific metrics like API rate limits, auto-scaling behavior, and cloud service health. Many organizations use specialized cloud monitoring tools that integrate with AWS, Azure, or Google Cloud platforms while using traditional tools for on-premises systems.

What should I do when I receive a monitoring alert?

Follow your documented response procedures for that specific alert type. Start by assessing severity—is this an immediate threat to operations or a developing issue? Gather relevant context from monitoring dashboards to understand what’s happening. Check if the problem persists or was transient. Execute appropriate troubleshooting steps based on your runbooks. Document your findings and actions in your ticketing system. If unable to resolve quickly, escalate to appropriate specialists according to your incident response plan.

How often should monitoring configurations be updated?

Review monitoring configurations whenever you make significant infrastructure changes—adding new servers, deploying major applications, or changing network architecture. Conduct broader monitoring reviews quarterly to assess alert effectiveness, adjust thresholds based on evolved baselines, and identify coverage gaps. After major incidents, review whether additional monitoring might have detected the problem earlier. This regular maintenance ensures monitoring remains aligned with your evolving IT environment and business needs.

How Technijian Can Help

At Technijian, we understand that Southern California businesses need IT infrastructure that works reliably without constant attention. Our comprehensive managed monitoring services combine enterprise-grade server monitoring tools with 24/7 expert oversight, protecting your business from costly downtime while you focus on growth.

Our proactive IT support approach monitors every aspect of your IT infrastructure—servers, networks, applications, and security systems—detecting problems before they impact your operations. When our monitoring systems identify developing issues, our experienced technicians intervene immediately, resolving problems during off-hours whenever possible to eliminate disruption to your business day.

We customize monitoring strategies for each client’s unique environment and business priorities. Whether you operate a handful of servers or manage complex multi-location infrastructure, our scalable monitoring solutions grow with your business. Our team handles all aspects of monitoring implementation, configuration, and ongoing maintenance, eliminating the need for you to build internal expertise or manage complex monitoring platforms.

Beyond monitoring, Technijian provides comprehensive IT infrastructure management including cybersecurity protection, cloud services, backup and disaster recovery, and Microsoft 365 optimization. Our holistic approach ensures all aspects of your technology work together seamlessly, supporting your business objectives rather than creating obstacles.

With more than two decades of experience serving Orange County and Southern California businesses, we understand the unique challenges faced by growing companies in our region. Our local team provides responsive, personalized service backed by enterprise-level capabilities typically available only to much larger organizations.

Ready to stop worrying about server downtime? Schedule a free infrastructure audit with Technijian today. We’ll assess your current monitoring capabilities, identify gaps in coverage, and develop a customized plan to protect your business from costly outages. Contact us to discover how proactive monitoring can transform your IT from a source of stress into a competitive advantage.

About Technijian

Technijian is a leading managed IT services provider serving businesses throughout Orange County and Southern California since 2000. Founded by Ravi Jain, our company has grown from a small technology consulting practice into a comprehensive IT solutions partner trusted by hundreds of local businesses.

We specialize in protecting small and medium-sized businesses from technology risks while enabling growth through strategic IT infrastructure. Our services include cybersecurity, cloud computing, managed monitoring, Microsoft 365 management, backup and disaster recovery, and AI workflow automation. Every solution we implement focuses on improving reliability, enhancing security, and reducing total cost of ownership.

Our team of certified technicians and engineers brings decades of combined experience to every client engagement. We stay current with rapidly evolving technology trends and threats, ensuring our clients benefit from the latest capabilities without the complexity of managing them independently.

Based in Irvine, California, Technijian maintains strong roots in the local business community. We understand the unique challenges facing growing companies in Southern California and provide personalized service that larger national providers simply cannot match. When you work with Technijian, you gain a technology partner committed to your success, not just a vendor selling services.