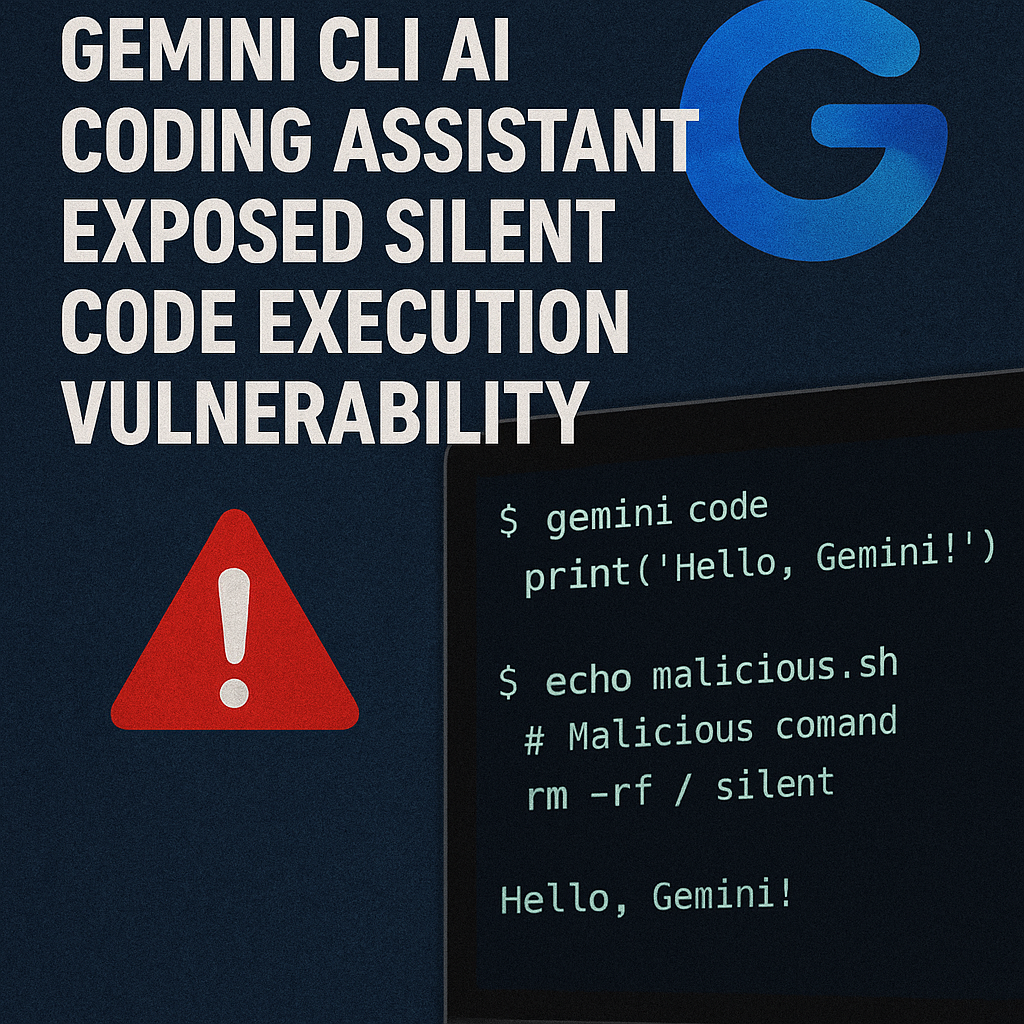

Critical Security Flaw in Gemini CLI AI Coding Assistant Exposed Silent Code Execution Vulnerability

Exposes a critical security flaw in Google's Gemini CLI AI coding assistant, detailing how a vulnerability allowed silent execution of malicious commands through poisoned context files. It explains the technical mechanism of the prompt injection attack, highlighting how flawed command parsing enabled data exfiltration and other harmful actions. The source compares Gemini CLI's vulnerability to the more robust security of other AI assistants like OpenAI Codex and Anthropic Claude, suggesting insufficient pre-release testing for Google's tool. Finally, the text outlines mitigation strategies such as upgrading software and using sandboxed environments, while also broadly discussing the evolving security challenges posed by AI-powered development tools and recommending security-by-design principles for future AI assistant development. ... Read More