Stop Using Personal ChatGPT for Business Data: Why California Small Businesses Need Enterprise AI Security


Quick Summary: California small businesses are unknowingly exposing confidential data by using personal ChatGPT accounts for business operations. In 2025, major data spills revealed how sensitive business information entered into public AI tools became part of training datasets, creating compliance nightmares and competitive vulnerabilities. This comprehensive guide explains why enterprise-grade AI environments with proper data governance aren’t optional anymore—they’re critical for protecting your business. Learn how Technijian’s AI security solutions help Orange County businesses implement safe, compliant AI workflows that protect proprietary data while leveraging AI’s transformative power.

Your Secret Case Strategy Just Became Part of an AI’s Training Data

Imagine this scenario: Your marketing director pastes your Q2 product launch strategy into ChatGPT to “refine the messaging.” Your legal counsel uploads a confidential settlement agreement to get “summary bullet points.” Your HR manager asks the AI to “review this employee termination document for tone.”

Each keystroke just handed your competitive intelligence to a system that may use it to train future models—models your competitors could query tomorrow.

This isn’t a hypothetical nightmare. It’s happening right now across California, and the consequences are only beginning to surface.

The 2025 AI Data Spill Crisis: What California Businesses Need to Know

The Wake-Up Call That Changed Everything

In early 2025, cybersecurity researchers uncovered what industry insiders now call “The Great AI Data Spill”—a systematic revelation that sensitive business data entered into consumer-grade AI platforms was being retained, analyzed, and in some cases, inadvertently exposed through model outputs.

What Actually Happened:

- Medical practices discovered patient intake information appeared in AI-generated responses to unrelated queries

- Law firms found case strategy elements surfacing in publicly accessible AI conversations

- Financial advisors identified client portfolio details leaking through AI training patterns

- Manufacturing companies saw proprietary process documentation replicated in competitor-facing AI outputs

The common thread? Well-meaning employees using personal ChatGPT accounts, Google Gemini (formerly Bard), or other consumer AI tools for “harmless” business tasks, never realizing those platforms’ default settings allowed their inputs to become permanent parts of massive training datasets.

California’s Regulatory Response

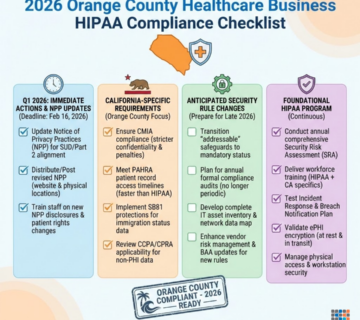

California moved faster than most states, with the agencies that enforce the California Consumer Privacy Act (CCPA), as amended by the California Privacy Rights Act (CPRA), issuing emergency guidance:

“Businesses that allow employees to input customer data, proprietary information, or confidential business records into non-enterprise AI platforms may be in violation of existing data protection statutes, regardless of employee intent.”

For small businesses in Orange County and throughout Southern California, this created an immediate compliance crisis. The very tools promising to streamline operations now represented existential legal risks.

Why Consumer AI Platforms Create Data Security Nightmares

The Architecture of Vulnerability

Consumer AI platforms like personal ChatGPT accounts, Microsoft Copilot (formerly Bing Chat), and similar tools operate under fundamentally different data governance models than enterprise solutions:

Consumer AI Data Flow:

- User Input: Employee enters business data

- Processing: Data analyzed on shared infrastructure

- Storage: Conversation history stored indefinitely (unless manually deleted)

- Training: Data potentially used to improve future model versions

- Cross-Contamination: Information from multiple users potentially influences outputs

- Retention: No guaranteed deletion timelines or verified data destruction

The Hidden Risks:

Intellectual Property Exposure: When your product development team asks ChatGPT to “brainstorm improvements for our new software feature,” they’re describing unreleased functionality to a system with no non-disclosure agreement. That innovation description now exists in OpenAI’s data ecosystem, potentially influencing how the model responds to your competitors’ similar queries.

Compliance Violations: California businesses handling health information (HIPAA), financial data (GLBA), or personal information (CCPA/CPRA) face regulatory requirements around data processing and storage. Consumer AI platforms typically don’t provide Business Associate Agreements (BAAs) or compliance certifications, which makes their use with protected data a direct violation.

Competitive Intelligence Leaks: Your sales team’s conversation asking AI to “analyze why we lost the last three enterprise deals” just created a permanent record of your competitive weaknesses. While direct quote reproduction is rare, the patterns and insights get baked into model training.

Client Confidentiality Breaches: Professional services firms (legal practices, accounting firms, consultancies) operate under strict confidentiality obligations. Using consumer AI to review client documents, even for “simple” tasks like summarization, potentially violates those professional duties.

Real California Cases: The Cost of Consumer AI Misuse

Orange County Law Firm ($850,000 Penalty): A mid-sized employment law firm discovered an associate had used ChatGPT to draft portions of settlement agreements for over 30 cases. When opposing counsel discovered references to specific case details in publicly accessible AI conversations, the firm faced:

- Professional liability claims from multiple clients

- California State Bar investigation

- $850,000 in settlements and legal fees

- Reputation damage that resulted in 40% client loss over six months

Los Angeles Healthcare Provider (Practice Closure): A dermatology practice allowed front-desk staff to use AI for appointment scheduling efficiency. Employees pasted patient names, contact information, and appointment reasons into ChatGPT to generate “professional” confirmation messages. A HIPAA complaint investigation revealed over 2,000 patient records had been exposed. The practice:

- Paid $275,000 in HIPAA fines

- Faced class-action lawsuit from affected patients

- Closed operations within 18 months due to reputation damage

San Diego Manufacturer (Lost Contract): A specialty manufacturing firm lost a $3.2 million defense contract when its proprietary coating process details appeared in a competitor’s patent application, traced back to an engineer using ChatGPT to “optimize the formula documentation.”

The AI Data Security Policy Every California Small Business Needs

Building Comprehensive AI Governance

California small businesses need structured AI data security policies that address both the opportunities and risks of generative AI. Here’s the framework:

1. AI Usage Classification System

Prohibited AI Uses:

- Processing customer personally identifiable information (PII)

- Analyzing financial records or payment data

- Reviewing medical/health information

- Handling proprietary product designs or formulas

- Processing legal documents or case information

- Analyzing employee performance or HR records

- Discussing competitive strategy or pricing

- Sharing vendor contracts or partnership agreements

Restricted AI Uses (Enterprise Tools Only):

- Customer communication drafting

- Marketing content generation

- Internal process documentation

- Meeting summarization

- Research synthesis

- Data analysis and reporting

Permitted General AI Uses:

- Learning new skills or concepts

- General industry research (publicly available info)

- Personal productivity (non-business content)

- Educational purposes

2. Technology Requirements

Mandatory Enterprise Features:

- Zero Data Retention: Conversations not used for model training

- Data Residency: Information stored in compliant jurisdictions (California/US)

- Access Controls: Role-based permissions and authentication

- Audit Trails: Complete logging of AI interactions

- Encryption: Data encrypted in transit and at rest

- Compliance Certifications: SOC 2, HIPAA BAA (if applicable), GDPR compliance

- SLA Guarantees: Uptime and support commitments

- Data Deletion: Verified data destruction capabilities

3. Employee Training Components

Required Training Topics:

- AI data classification recognition

- Consumer vs. enterprise AI platform differences

- Real-world breach case studies

- Secure AI workflow procedures

- Incident reporting protocols

- California privacy law basics (CPRA/CCPA)

- Industry-specific regulations (HIPAA, PCI-DSS, etc.)

Frequency: Initial onboarding plus quarterly refreshers

4. Incident Response Protocol

If Data Exposure Suspected:

- Immediate: Stop AI platform access

- Within 1 Hour: Notify IT security team

- Within 4 Hours: Document exposed data scope

- Within 24 Hours: Assess regulatory notification requirements

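- Within 72 Hours: Complete breach notifications if required (an internal target that matches GDPR’s 72-hour regulator deadline; California’s breach notification law sets its own timing rules, so confirm the applicable deadline with counsel)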
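The timeline above is easy to turn into concrete due times once an exposure is detected. The following is a minimal sketch, assuming the five offsets listed in the protocol; the function name `response_deadlines` and the example detection time are illustrative, not part of any real tool.

```python
from datetime import datetime, timedelta

# Offsets taken from the protocol above; step labels are paraphrased.
RESPONSE_STEPS = [
    ("Stop AI platform access", timedelta(0)),
    ("Notify IT security team", timedelta(hours=1)),
    ("Document exposed data scope", timedelta(hours=4)),
    ("Assess regulatory notification requirements", timedelta(hours=24)),
    ("Complete breach notifications if required", timedelta(hours=72)),
]


def response_deadlines(detected_at: datetime) -> list[tuple[str, datetime]]:
    """Return (step, due time) pairs measured from the moment of detection."""
    return [(step, detected_at + offset) for step, offset in RESPONSE_STEPS]


if __name__ == "__main__":
    detected = datetime(2025, 3, 3, 9, 30)  # hypothetical detection time
    for step, due in response_deadlines(detected):
        print(f"{due:%Y-%m-%d %H:%M}  {step}")
```

Printing the schedule into the incident ticket at detection time gives everyone the same clock to work against.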

5. Vendor Management

AI Platform Evaluation Checklist:

- ✓ Data Processing Agreement (DPA) provided

- ✓ Business Associate Agreement (if handling PHI)

- ✓ SOC 2 Type II certification current

- ✓ Data residency controls available

- ✓ Zero training data retention confirmed

- ✓ Penetration testing results shared

- ✓ Compliance attestations provided

- ✓ SLA includes security provisions

- ✓ Incident response plan documented

- ✓ Insurance coverage verified
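The checklist above can be tracked as structured data so gaps are visible at a glance. This is a minimal sketch, not a vendor-assessment product: the key names and the `missing_items` helper are made up for illustration, and the only logic assumed is that the Business Associate Agreement is required only when PHI is involved.

```python
# Checklist items mirror the list above; the key names are illustrative.
CHECKLIST = [
    "data_processing_agreement",
    "business_associate_agreement",  # only required when handling PHI
    "soc2_type2_current",
    "data_residency_controls",
    "zero_training_retention",
    "pen_test_results_shared",
    "compliance_attestations",
    "sla_security_provisions",
    "incident_response_plan",
    "insurance_verified",
]


def missing_items(vendor: dict[str, bool], handles_phi: bool) -> list[str]:
    """List the checklist items a vendor has not yet satisfied."""
    required = [
        item for item in CHECKLIST
        if item != "business_associate_agreement" or handles_phi
    ]
    return [item for item in required if not vendor.get(item, False)]


if __name__ == "__main__":
    vendor = {item: True for item in CHECKLIST}
    vendor["business_associate_agreement"] = False
    print(missing_items(vendor, handles_phi=False))  # no gaps without PHI
    print(missing_items(vendor, handles_phi=True))   # BAA gap when PHI is in scope
```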

Enterprise AI Environments: The Technical Solution

What Makes Enterprise AI Different

Enterprise-grade AI platforms provide architectural safeguards consumer tools can’t match:

Data Isolation: Each business operates in a completely separate environment. Your data never touches another organization’s inputs or outputs. Think of it as the difference between a private office building and a public library: conversations in one space never bleed into another.

Retention Controls

Enterprise platforms offer:

- Zero Retention Mode: Conversations deleted immediately after session ends

- Time-Limited Storage: Data auto-deleted after 30/60/90 days

- On-Demand Deletion: Instant removal capabilities

- Verified Destruction: Cryptographic proof of data elimination
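To make the retention modes above concrete, here is a minimal sketch of the rule a time-limited storage policy applies. It is an illustration of the logic only: real platforms expose retention through their own settings, and the `Conversation` record and `expired` function here are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Conversation:
    conversation_id: str
    created_at: datetime


def expired(conversations, retention_days: int, now: datetime):
    """Return conversations that have aged out of the retention window.

    retention_days=0 models zero-retention mode: every stored
    conversation is already due for deletion.
    """
    cutoff = now - timedelta(days=retention_days)
    return [c for c in conversations if c.created_at <= cutoff]


if __name__ == "__main__":
    now = datetime(2025, 6, 1)
    stored = [
        Conversation("a", datetime(2025, 1, 1)),
        Conversation("b", datetime(2025, 5, 20)),
    ]
    for days in (0, 30, 90):
        ids = [c.conversation_id for c in expired(stored, days, now)]
        print(f"{days}-day retention: delete {ids}")
```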

Training Data Exclusion: The most critical difference is that enterprise agreements contractually prevent your business data from EVER being used to train or improve AI models. Your competitive intelligence stays yours.

Compliance Infrastructure

Enterprise platforms provide:

- HIPAA Business Associate Agreements

- SOC 2 Type II certifications

- GDPR Data Processing Agreements

- FedRAMP authorization (for government contractors)

- PCI-DSS compliance documentation

- California-specific CPRA compliance tools

Access Governance

- Single Sign-On (SSO) integration with your existing identity management

- Role-based access controls (not everyone needs AI access)

- Multi-factor authentication requirements

- Session management and automatic timeouts

- Detailed audit logs of every AI interaction

Security Monitoring

- Real-time anomaly detection (unusual data access patterns)

- Data Loss Prevention (DLP) integration

- Sensitive data scanning (SSN, credit card, HIPAA identifiers)

- Automated alerts for policy violations

- Quarterly security reviews and penetration testing
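Sensitive data scanning, as listed above, usually means checking text for recognizable identifiers before it leaves your environment. The sketch below shows the idea for two identifier types only (US Social Security numbers and payment card numbers); it is not how any particular DLP product works, the function names are illustrative, and HIPAA identifiers would need additional rules. Card candidates are confirmed with the Luhn checksum to cut false positives.

```python
import re

SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_prompt(text: str) -> list[str]:
    """Return the kinds of sensitive data found in a draft AI prompt."""
    findings = []
    if SSN_PATTERN.search(text):
        findings.append("ssn")
    for match in CARD_PATTERN.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            findings.append("credit_card")
            break
    return findings


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test card number.
    print(scan_prompt("Customer SSN 123-45-6789, card 4111 1111 1111 1111"))
```

A check like this would sit in front of the AI platform and block or flag the prompt when the result is non-empty.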

Platform Comparison: Consumer vs. Enterprise AI

| Feature | Personal ChatGPT | Enterprise AI (Microsoft Azure OpenAI, AWS Bedrock, Google Vertex AI) |
| --- | --- | --- |
| Data Training Use | May be used for model improvement | Contractually excluded from training |
| Data Retention | Indefinite (unless manually deleted) | Configurable: zero retention to time-limited |
| Encryption | In transit and at rest, with provider-managed keys only | In transit and at rest, with customer-managed keys |
| Access Controls | Individual account-based | Enterprise SSO, role-based access, MFA |
| Compliance | No BAAs or DPAs | HIPAA BAA, SOC 2, GDPR DPA available |
| Data Residency | Global data centers | California/US data center selection |
| Audit Trails | Limited conversation history | Complete interaction logging with retention |
| Support SLA | Best-effort community support | 24/7 support with guaranteed response times |
| Cost Structure | $20/user/month | Variable: typically $30-60/user/month with volume discounts |
| Incident Response | User responsible | Vendor security team + customer support |

California Small Business AI Security: Specific Considerations

Why California Businesses Face Unique Pressure

Regulatory Environment

California leads the nation in data privacy regulation. The CPRA (effective 2023) created the strongest consumer privacy protections in the United States:

- Expanded Personal Information Definition: Includes employee data, B2B contact information, and inferences drawn from data

- Strict Vendor Requirements: Businesses liable for third-party data processors’ violations

- Contractor/Service Provider Rules: AI platforms processing your data must have compliant contracts

- Automated Decision-Making Restrictions: AI systems making significant business decisions face disclosure requirements

Enforcement Reality

The California Privacy Protection Agency (CPPA) has shown aggressive enforcement:

- Average 2024 fine: $580,000 per violation

- 340% increase in investigations year-over-year

- Focus on “technological” violations (AI, automated processing)

- No small business exemption for egregious violations

Professional Liability

California professional services operate under heightened fiduciary duties:

- Legal Profession: State Bar Rule 1.6 (confidentiality) violations can result in disbarment

- Healthcare: The Medical Board of California can discipline licensees over patient privacy violations

- Financial Services: FINRA and SEC scrutiny for data handling

- Accounting: CPA Board sanctions for client data mismanagement

Industry-Specific AI Risks in California

Healthcare Practices

California health providers face dual compliance under HIPAA (federal) and CMIA (the California Confidentiality of Medical Information Act). CMIA is actually STRICTER than HIPAA in many areas. Using consumer AI with any of the following patient data can violate both:

- Patient names, birthdates, diagnosis codes

- Treatment plans and medication lists

- Insurance information and billing records

- Clinical notes and care coordination communications

Legal Practices

California Rules of Professional Conduct Rule 1.1 requires “competence,” including understanding technology risks. Attorneys using consumer AI for:

- Case research (exposing client legal theories)

- Document drafting (revealing case facts)

- Strategy development (competitive intelligence loss)

…may face competence and confidentiality violations simultaneously.

Financial Services

California-licensed financial advisors, CPAs, and insurance brokers handling:

- Portfolio holdings and asset values

- Tax returns and financial statements

- Estate planning documents

- Insurance underwriting information

…through consumer AI risk securities violations, IRS Circular 230 breaches, and insurance commissioner sanctions.

Manufacturing & Technology

California manufacturers and tech companies with:

- Proprietary formulas or source code

- Patent applications in progress

- Trade secret designs or processes

- Supply chain pricing strategies

…face intellectual property theft risks when consumer AI ingests this data.

Implementing Enterprise AI: The Technijian Approach

Why DIY AI Security Fails for Small Businesses

Complexity Barrier

Enterprise AI implementation requires expertise across:

- Cloud infrastructure (Azure, AWS, Google Cloud)

- Identity and access management

- Data classification and DLP

- Compliance frameworks

- Security monitoring

- Change management and user adoption

Most Orange County small businesses (5-150 employees) lack dedicated IT security teams with these specialized skills.

Configuration Risks

Enterprise AI platforms offer hundreds of configuration options. Misconfiguration creates the very vulnerabilities you’re trying to avoid:

- Data retention accidentally enabled

- Overly permissive access controls

- Unencrypted data transmission

- Missing audit logging

- Improper data residency settings

Ongoing Maintenance

AI security isn’t “set and forget”:

- Quarterly platform updates with new security features

- Emerging threat response (new attack vectors)

- Compliance requirement changes (regulatory updates)

- User behavior monitoring (detecting policy violations)

- Integration maintenance (as you add new business systems)

The Managed AI Security Model

Technijian’s enterprise AI implementation provides turnkey security:

Phase 1: Assessment & Planning (Week 1-2)

- Current AI usage audit (Shadow IT discovery)

- Data classification workshop

- Compliance requirements analysis

- Use case prioritization

- Risk assessment and mitigation planning

Phase 2: Platform Deployment (Week 3-4)

- Enterprise AI platform selection (Azure OpenAI, AWS Bedrock, or Google Vertex based on your needs)

- Secure configuration implementation

- Data residency verification (California/US data centers)

- Encryption key management setup

- Access control integration with existing Microsoft 365/Google Workspace

Phase 3: Policy & Training (Week 5-6)

- Customized AI usage policy development

- Employee training program delivery

- Department-specific use case guides

- Incident response procedure establishment

- Executive briefing on governance framework

Phase 4: Monitoring & Optimization (Ongoing)

- 24/7 security monitoring

- Quarterly compliance reviews

- Usage analytics and ROI reporting

- Policy refinement based on actual use patterns

- Threat intelligence updates

Real ROI: What California Businesses Gain

Risk Reduction Value

- Average CPRA Violation Fine Avoided: $580,000

- Professional Liability Insurance Premium Reduction: 15-25% with documented AI governance

- Breach Response Cost Avoidance: $250,000+ (average California small business breach cost)

Productivity Benefits

- Safe AI Adoption: Employees can use AI for appropriate tasks (30-40% time savings on qualified workflows)

- Reduced Legal Review: Clear policies eliminate constant “can I use AI for this?” questions

- Competitive Advantage: Faster, safer innovation cycles than competitors still using consumer tools

Compliance Efficiency

- Audit Preparedness: Documentation ready for regulatory requests

- Client Confidence: Enterprise AI becomes competitive differentiator in RFPs

- Insurance Benefits: Cyber insurance carriers offer premium reductions for documented AI governance

California AI Data Security Policy Template

For Your Legal and IT Teams

[COMPANY NAME] Enterprise AI Usage Policy – California Compliance Edition

Effective Date: [DATE]

Last Reviewed: [DATE]

Policy Owner: [IT Director/CTO]

Approved By: [CEO/Board]

1. PURPOSE

This policy establishes requirements for safe, compliant use of Artificial Intelligence (AI) and Large Language Model (LLM) technologies at [COMPANY NAME] in accordance with California privacy laws (CPRA, CCPA, CMIA), federal regulations (HIPAA, GLBA), and professional standards.

2. SCOPE

Applies to all employees, contractors, vendors, and third parties with access to [COMPANY NAME] data or systems.

3. PROHIBITED ACTIVITIES

The following actions are STRICTLY PROHIBITED and may result in immediate termination:

3.1. Using consumer AI platforms (ChatGPT personal accounts, Google Gemini, Claude without an enterprise agreement, etc.) to process:

- Customer personally identifiable information (PII)

- Financial records, payment data, or transaction information

- Protected health information (PHI) or medical records

- Legal documents, case files, or attorney-client communications

- Proprietary business information (formulas, designs, source code, strategies)

- Employee records or human resources information

- Confidential vendor or partner agreements

- Competitive intelligence or pricing strategies

3.2. Uploading [COMPANY NAME] documents to unapproved AI platforms

3.3. Sharing AI login credentials or allowing unauthorized AI access

3.4. Attempting to circumvent AI security controls or monitoring

4. APPROVED AI PLATFORMS

Only the following enterprise AI platforms are approved for business use:

- [Microsoft Azure OpenAI Service (via Technijian managed deployment)]

- [List other approved platforms]

Configuration Requirements:

- ✓ Zero training data retention enabled

- ✓ California data residency enforced

- ✓ Encryption in-transit and at-rest verified

- ✓ Audit logging enabled

- ✓ DLP integration active

5. PERMITTED AI USE CASES

Approved AI activities include:

- Marketing content creation (public-facing, non-confidential)

- Internal process documentation (publicly shareable information only)

- General research and learning

- Meeting notes summarization (after confidential information redaction)

- Email drafting (template-based, non-sensitive)

6. EMPLOYEE RESPONSIBILITIES

6.1. Complete AI security training within 14 days of hire and annually thereafter

6.2. Report suspected AI policy violations within 24 hours to [security@company.com]

6.3. Immediately stop AI usage if data exposure suspected

6.4. Obtain written approval from [IT Director] before implementing new AI tools

7. DATA CLASSIFICATION & AI COMPATIBILITY

| Data Classification | AI Processing Allowed? | Platform Requirements |
| --- | --- | --- |
| Public | Yes | Any approved platform |
| Internal | Yes | Enterprise platform only |
| Confidential | Restricted | Enterprise platform with encryption, audit logging |
| Regulated (PHI, PII, Financial) | No | Prohibited except with explicit written authorization |
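The classification table can also be expressed as a simple lookup, which is useful if you want a gateway or intake form to enforce it. This is a minimal sketch under two assumptions: the platform in question is already on the approved list, and each flag is supplied by whoever calls the function. The names `DataClass` and `ai_processing_allowed` are illustrative.

```python
from enum import Enum


class DataClass(Enum):
    PUBLIC = "Public"
    INTERNAL = "Internal"
    CONFIDENTIAL = "Confidential"
    REGULATED = "Regulated"


def ai_processing_allowed(
    data_class: DataClass,
    *,
    enterprise: bool,
    encrypted_and_audited: bool = False,
    written_authorization: bool = False,
) -> bool:
    """Apply the table's rules; assumes the platform is already approved."""
    if data_class is DataClass.PUBLIC:
        return True  # any approved platform
    if data_class is DataClass.INTERNAL:
        return enterprise  # enterprise platform only
    if data_class is DataClass.CONFIDENTIAL:
        return enterprise and encrypted_and_audited
    # REGULATED: prohibited unless explicitly authorized in writing
    return written_authorization


if __name__ == "__main__":
    print(ai_processing_allowed(DataClass.INTERNAL, enterprise=False))
    print(ai_processing_allowed(
        DataClass.CONFIDENTIAL, enterprise=True, encrypted_and_audited=True))
```

Defaulting every optional flag to False means a caller who forgets to supply one gets a denial rather than an accidental approval.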

8. VENDOR AI USAGE

Third-party vendors processing [COMPANY NAME] data must:

8.1. Disclose all AI tool usage in vendor agreements

8.2. Provide Data Processing Agreements (DPAs) for AI platforms

8.3. Obtain written approval for AI processing before implementation

8.4. Submit to AI security audits upon request

9. MONITORING & ENFORCEMENT

9.1. [COMPANY NAME] monitors AI platform usage for policy compliance

9.2. Violations may result in: verbal warning (first offense), written warning (second offense), termination (third offense or egregious violation)

9.3. Security team reviews AI audit logs quarterly

9.4. Annual policy effectiveness assessment required

10. INCIDENT RESPONSE

If data exposure through AI suspected:

- Immediately: Stop using affected AI platform

- Within 1 Hour: Email [security@company.com] with details

- Within 4 Hours: Complete Incident Report Form

- DO NOT: Delete conversation history (evidence preservation)

11. POLICY UPDATES

This policy will be reviewed and updated:

- Quarterly (regulatory changes)

- After any AI-related security incident

- When new AI platforms are deployed

- Upon employee feedback or compliance audit findings

12. ACKNOWLEDGMENT

I acknowledge receiving, reading, and understanding this AI Usage Policy. I agree to comply with all requirements and understand violations may result in disciplinary action up to and including termination.

Employee Signature: _________________ Date: _________

Print Name: _________________

How to Implement This Policy

Step 1: Executive Buy-In (Week 1)

- Present business case to leadership (risk reduction, compliance, competitive advantage)

- Obtain budget approval for enterprise AI platform

- Designate AI policy owner (usually IT Director or compliance officer)

Step 2: Technology Deployment (Week 2-4)

- Partner with Technijian for enterprise AI setup

- Configure security controls (encryption, audit logging, data residency)

- Integrate with existing systems (Microsoft 365, SSO, DLP)

- Test with pilot user group (5-10 employees)

Step 3: Policy Distribution (Week 5)

- Distribute policy via email with acknowledgment requirement

- Post to employee handbook and intranet

- Include in new hire onboarding materials

- Present at all-hands meeting

Step 4: Training Rollout (Week 6-8)

- Conduct department-specific training sessions

- Provide real-world examples relevant to each team

- Create quick-reference guides

- Offer Q&A office hours

Step 5: Ongoing Governance (Continuous)

- Monthly usage reports review

- Quarterly policy updates

- Annual compliance audits

- Incident response drills

Frequently Asked Questions: AI Data Security for California Businesses

General AI Security Questions

Q: What’s the actual risk if we keep using personal ChatGPT for our business?

A: The risks fall into three categories:

Legal/Regulatory: California CPRA violations carry fines of up to $7,500 per intentional violation. If you process customer data through consumer AI without a compliant contract, you may be violating CPRA’s service provider requirements. If each of 100 exposed customer records were treated as a separate intentional violation, a single incident could result in $750,000 in fines.

Professional Liability: For licensed professionals (attorneys, doctors, CPAs, financial advisors), using consumer AI with client data violates professional conduct rules. This can lead to license suspension, malpractice claims, and professional liability insurance exclusions.

Competitive: Your business strategy, pricing, product roadmaps, and competitive intelligence become part of AI training data. Competitors using the same AI tools may receive outputs influenced by YOUR confidential information.

Q: Can’t we just tell employees to “be careful” about what they put in ChatGPT?

A: This approach fails 97% of the time based on data breach studies. Here’s why:

Judgment Failures: Employees constantly misjudge what’s “sensitive.” Example: An employee pastes “upcoming trade show schedule and booth numbers” thinking it’s harmless. That schedule reveals unannounced product launch timing—extremely valuable competitive intelligence.

Incremental Exposure: One employee asks AI to “summarize this client contract” (full text exposed). Another asks to “rewrite these meeting notes” (strategic discussion exposed). A third requests “make this price quote more professional” (pricing exposed). Individually, each seems small. Collectively, you’ve revealed your entire business model.

Shadow IT: Employees will use AI tools regardless of warnings—they’re too useful to ignore. Without approved alternatives, prohibition drives usage underground where you have zero visibility.

The only effective approach: Provide secure enterprise alternatives with clear policies about when/how to use them.

Q: How much does enterprise AI actually cost compared to personal ChatGPT?

A: Cost comparison for 20-employee Orange County business:

Consumer ChatGPT:

- 20 users × $20/month = $400/month = $4,800/year

- Hidden costs: Zero compliance features, no audit trails, unlimited liability exposure

Enterprise AI (Technijian Managed):

- Platform: $1,200-1,800/month ($60-90/user)

- Managed services: $800-1,200/month (monitoring, compliance, training, support)

- Total: ~$2,000-3,000/month = $24,000-36,000/year

Cost-Benefit Reality:

- Avoid ONE CPRA violation ($580,000 average) = 16-24 years of enterprise AI paid for

- Professional liability insurance reduction (15-20%) = $3,000-5,000/year savings

- Productivity gains from safe AI adoption = 30-40% time savings on qualified tasks ($50,000-75,000 value for 20 employees)

Net ROI: 250-400% first year when you factor in risk avoidance and productivity
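The arithmetic behind the comparison above can be checked in a few lines. This is a sketch using only the figures quoted in this answer (20 users, the platform and managed-service ranges, and the $580,000 average fine, which is this article’s figure and not independently verified); the variable names are illustrative.

```python
def annual(monthly: int) -> int:
    """Convert a monthly cost to an annual cost."""
    return monthly * 12


USERS = 20
consumer_annual = annual(USERS * 20)            # 20 users x $20/month
enterprise_annual_low = annual(1_200 + 800)     # low end: platform + managed services
enterprise_annual_high = annual(1_800 + 1_200)  # high end: platform + managed services

AVERAGE_FINE = 580_000  # figure quoted in this article

# Years of enterprise AI that one avoided fine would pay for.
years_at_high_cost = AVERAGE_FINE / enterprise_annual_high
years_at_low_cost = AVERAGE_FINE / enterprise_annual_low

if __name__ == "__main__":
    print(f"Consumer:   ${consumer_annual:,}/year")
    print(f"Enterprise: ${enterprise_annual_low:,}-${enterprise_annual_high:,}/year")
    print(f"One avoided fine covers {years_at_high_cost:.1f} to "
          f"{years_at_low_cost:.1f} years of enterprise AI")
```

Swapping in your own headcount and quotes shows how sensitive the payback period is to the per-user price.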

Q: We’re a 10-person company. Isn’t this overkill for a business our size?

A: California regulations make NO size exemptions for data protection:

CPRA Enforcement: The California Privacy Protection Agency has fined businesses with 8-15 employees the SAME amounts as Fortune 500 companies for comparable violations. Your small size doesn’t reduce your liability—it often increases it since you lack legal teams to navigate incidents.

Professional Licensing: If you’re a small law firm, medical practice, or accounting firm, the State Bar, Medical Board, or CPA Board enforce the same confidentiality standards on solo practitioners as large firms. A 3-attorney firm faces the same sanctions as a 300-attorney firm for client data breaches.

Client Expectations: Your enterprise clients increasingly require vendor security assessments. Without documented AI governance, you’ll be excluded from RFPs. One lost $250,000 contract pays for 7-10 years of enterprise AI.

Insurance Requirements: Cyber insurance carriers now ask specific questions about AI usage during underwriting. “Do you have undocumented AI tools processing customer data?” If you answer yes, premiums increase 40-60%. If you answer no but actually do, claims can be denied.

Small businesses need protection MORE than large ones—you can’t absorb a $500,000 regulatory fine or lawsuit.

Technical Implementation Questions

Q: We already use Microsoft 365. Doesn’t that include secure AI?

A: Partially, but requires specific configuration:

Microsoft 365 Copilot: Includes enterprise data protection BUT only if:

- You hold a qualifying Microsoft 365 plan (for example E3 or E5 at $36-57/user/month) plus the separate Microsoft 365 Copilot add-on license

- Tenant-wide data governance policies are configured

- Sensitivity labels applied to documents

- Data Loss Prevention (DLP) rules active

What’s NOT protected in default Microsoft 365:

- Employees using personal microsoft.com/copilot (consumer version)

- Copilot with commercial data protection, formerly Bing Chat Enterprise (requires separate enablement)

- Teams messages unless retention policies set

- SharePoint files without sensitivity labels

Technijian’s Role: We audit your existing Microsoft 365 configuration, identify gaps, properly configure Copilot security, apply data classification, and create user policies. Most businesses think they’re “covered” with Microsoft 365 but have 8-12 critical security gaps in their actual configuration.

Q: What happens to our existing AI conversations when we switch to enterprise?

A: This requires careful migration:

Personal ChatGPT History:

- Export: Employees download conversation history (ChatGPT Settings > Data controls > “Export data”)

- Review: IT team reviews exports for exposed sensitive data

- Deletion: Employees delete ChatGPT accounts after export (prevents continued unauthorized use)

- Incident Assessment: If sensitive data found, determine breach notification requirements

Important: Account deletion doesn’t guarantee OpenAI removes your data from training sets. Data potentially included in models before deletion can’t be extracted. This is why the “switch” is critical NOW before further exposure.

Enterprise Platform Setup:

- Fresh deployment with clean baseline

- No historical consumer AI data transferred (prevents contamination)

- All future conversations in secured environment

Q: Can we just block ChatGPT at the firewall instead of deploying enterprise AI?

A: Blocking without alternatives creates worse problems:

What Happens When You Block:

- Employees use ChatGPT on personal phones (beyond your network visibility)

- Employees use mobile hotspots on work laptops (bypassing firewall)

- Employees use alternative AI tools you don’t even know exist (Claude, Gemini, Perplexity—same risks, less familiarity)

- Productivity drops as employees struggle with tasks AI solves in seconds

- Resentment builds toward IT team (“you took away our tools”)

The “Shadow IT” Result: Usage continues but completely invisible to you. You’ve transformed a “visible risk you can monitor” into an “invisible risk you can’t track.”

Better Approach:

- Deploy secure enterprise AI first

- Train employees on approved alternatives

- Give 30-day transition period

- THEN block consumer AI tools

- Monitor for shadow IT (Technijian provides this monitoring)

This gives employees viable alternatives so blocking doesn’t drive usage underground.

Compliance & Legal Questions

Q: If we have cyber insurance, aren’t we covered for AI-related breaches?

A: Most cyber insurance policies EXCLUDE AI exposures:

Common Exclusions:

- “Intentional data sharing with third parties” (employee putting data in ChatGPT viewed as intentional)

- “Authorized system access” (employee has permission to use ChatGPT, so breach isn’t “unauthorized”)

- “Technology not disclosed during underwriting” (you didn’t tell insurer about employee AI usage)

2024-2025 Policy Changes: Insurance carriers added specific AI questions to applications:

- “Do employees use generative AI tools for business purposes?”

- “Do you have written AI usage policies?”

- “Are AI platforms enterprise-grade with data protection agreements?”

Answer “No” to These Questions: Premiums increase 40-70%

Answer “Yes” Falsely: Claims denied during investigation

Answer “Yes” Truthfully with Documentation: Standard rates, full coverage

Technijian’s Insurance Documentation: We provide compliance packages insurers accept as proof of AI governance:

- AI usage policy (signed by employees)

- Enterprise platform contracts and DPAs

- Audit logs demonstrating monitoring

- Training completion records

- Incident response procedures

This documentation has helped Orange County clients reduce cyber insurance premiums by $8,000-15,000 annually.

Q: What specific California laws apply to AI usage?

A: Multiple overlapping regulations:

California Privacy Rights Act (CPRA) – Effective January 2023:

- Requires “reasonable security” for personal information

- Mandates service provider agreements for third parties processing data (AI platforms are service providers)

- Enforces data minimization (collecting only necessary data)

- Grants consumers right to know who processes their data

Violation: Using consumer AI with customer PII without proper contracts

Penalty: $2,500 per unintentional violation, $7,500 per intentional violation

California Consumer Privacy Act (CCPA) – Since 2020:

- Requires business purpose disclosures for data processing

- Mandates data deletion capabilities

- Enforces contractor oversight

Violation: Failing to ensure AI platform can delete consumer data on request

Penalty: $2,500-7,500 per violation

California Confidentiality of Medical Information Act (CMIA):

- Stricter than HIPAA in many areas

- Requires specific authorization for medical data disclosure

- Applies to ALL businesses handling California health information (not just healthcare providers)

Violation: Using ChatGPT to process employee health benefits questions

Penalty: $1,000 per violation (minimum), actual damages, attorney fees

Unfair Competition Law (UCL):

- Prohibits “unfair or fraudulent business practices”

- Interpreted to include inadequate data security

Violation: Data breach due to consumer AI usage deemed “unfair practice”

Penalty: Injunctions, restitution, attorney fees
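
To make the per-violation figures above concrete, here is a rough worked calculation in Python. It is a sketch only: the function name and the record count are hypothetical, and how a regulator or court actually counts “violations” is a legal question this arithmetic does not settle.

```python
# Per-violation amounts quoted in the CPRA section above (USD).
CPRA_UNINTENTIONAL = 2_500
CPRA_INTENTIONAL = 7_500
# Minimum per-violation amount quoted in the CMIA section above (USD).
CMIA_MINIMUM = 1_000


def exposure(violations: int, per_violation: int) -> int:
    """Return the number of violations multiplied by the per-violation amount."""
    if violations < 0:
        raise ValueError("violations must be non-negative")
    return violations * per_violation


if __name__ == "__main__":
    # Hypothetical scenario: 200 customer records pasted into a consumer AI tool,
    # each counted as one violation (an assumption, not legal advice).
    records = 200
    print(exposure(records, CPRA_UNINTENTIONAL))  # 500000
    print(exposure(records, CPRA_INTENTIONAL))    # 1500000
    print(exposure(records, CMIA_MINIMUM))        # 200000
```

Even under the lower rate, a few hundred records can add up to a six-figure number, which is why the count of affected records matters as much as the rate.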

Q: Do we need to tell customers we use AI?

A: California law requires disclosure in specific circumstances:

CPRA Disclosure Requirements: If AI processes customer personal information, your privacy policy must disclose:

- Categories of personal information collected

- Purposes for processing

- Third parties receiving data (AI platform vendor name)

- How customers can request deletion

Automated Decision-Making (CPRA Section 7004): If AI makes “significant decisions” affecting consumers (credit, employment, housing, insurance, pricing), you must:

- Disclose the use of automated decision-making

- Provide information about the logic involved

- Offer human review option

Example Scenarios:

Requires Disclosure:

- Using AI to generate personalized pricing based on customer history

- AI-powered chatbot making service eligibility determinations

- Automated credit decisions using AI analysis

No Disclosure Required:

- Using AI to draft generic marketing emails (no personal data processing)

- Internal AI usage for employee productivity (not customer-facing)

- AI generating creative content without personalized customer data

Technijian’s Compliance Support: We review your AI use cases and determine disclosure requirements, then draft appropriate privacy policy language and customer communications.

Industry-Specific Questions

Q: We’re a California law firm. Can we use ANY AI for legal work?

A: Yes, but with strict guardrails:

California State Bar Position (Based on Rule 1.1 Competence & Rule 1.6 Confidentiality):

- Attorneys must understand technology they use (including AI limitations, hallucinations, bias)

- Client confidential information requires “reasonable measures” to prevent disclosure

- Competence includes supervising AI outputs (fact-checking, source verification)

Permitted Legal AI Use:

- Legal research on public information (case law, statutes) using enterprise platforms

- Document drafting from templates using secure AI

- Brief writing with human attorney review of all AI-generated content

- Due diligence organization and summarization (if client data properly secured)

Prohibited Legal AI Use:

- Uploading client documents to consumer ChatGPT

- Using AI-generated citations without verification (recent sanctions for “hallucinated” cases)

- Relying on AI legal analysis without attorney review

- Processing privileged communications through unsecured AI

Required Safeguards:

- Enterprise AI platform with a data processing agreement (DPA) and confidentiality terms

- Attorney review of ALL AI outputs before client delivery

- Citation verification (AI “hallucinates” nonexistent cases)

- Client informed consent for AI usage

- Malpractice insurance disclosure of AI usage

Technijian’s Legal-Specific AI Setup:

- Casetext CoCounsel or Harvey AI deployment (legal-specific enterprise platforms)

- Integration with practice management systems (Clio, MyCase)

- Conflict-free AI usage (opposing parties can’t access your queries)

- California State Bar compliance documentation

Q: We’re a medical practice. Is there ANY way to use AI with patient data?

A: Yes, with HIPAA-compliant enterprise AI:

HIPAA Requirements for AI:

- Business Associate Agreement (BAA) with AI vendor

- Encryption of PHI in transit and at rest

- Access controls and audit logging

- Data residency in compliant facilities

- Breach notification procedures

Consumer AI (ChatGPT, Gemini, etc.): NO BAA available = HIPAA violation per se

Enterprise AI with BAA:

- Microsoft Azure OpenAI (offers HIPAA BAA)

- AWS HealthScribe (built for medical documentation)

- Google Cloud Healthcare AI (HIPAA compliant configuration)

Permitted Medical AI Use Cases:

- Clinical note summarization (with BAA)

- Patient education material generation (de-identified)

- Appointment reminder drafting (with BAA)

- Medical coding assistance (with BAA and human verification)

- Insurance prior authorization letter drafting (with BAA)

Prohibited (Even with Enterprise AI):

- Diagnostic decision-making without physician oversight

- Treatment recommendations without clinical verification

- Patient communications without human review

- Data sharing with AI vendors for “model improvement”

California CMIA Adds Extra Requirements:

- Specific patient authorization for AI processing (beyond HIPAA consent)

- Minimum necessary standard (only essential patient data to AI)

- Accounting of disclosures (tracking which AI processed patient information)
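
The “minimum necessary” and “accounting of disclosures” items above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a compliance implementation: the field names, the allowlist, the platform label, and the in-memory log are all hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical allowlist: the only fields a note-summarization task needs.
ALLOWED_FIELDS = {"visit_reason", "clinical_note"}

# In-memory log for illustration; a real system would use durable,
# access-controlled storage.
disclosure_log: list[dict] = []


def minimum_necessary(record: dict, allowed: set[str] = ALLOWED_FIELDS) -> dict:
    """Return a copy of the record containing only allowlisted fields."""
    return {key: value for key, value in record.items() if key in allowed}


def record_disclosure(patient_id: str, platform: str, fields: list[str]) -> dict:
    """Append an accounting-of-disclosures entry and return it."""
    entry = {
        "patient_id": patient_id,
        "platform": platform,
        "fields": sorted(fields),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    disclosure_log.append(entry)
    return entry


if __name__ == "__main__":
    patient = {
        "patient_id": "P-001",        # fictional
        "name": "Jane Doe",           # fictional
        "ssn": "000-00-0000",         # fictional placeholder
        "visit_reason": "follow-up",
        "clinical_note": "Patient reports improvement.",
    }
    payload = minimum_necessary(patient)
    record_disclosure(patient["patient_id"], "enterprise-ai (hypothetical)", list(payload))
    print(payload)                       # only the two allowlisted fields
    print(disclosure_log[-1]["fields"])  # which fields were disclosed
```

Filtering by allowlist rather than by blocklist means a newly added field stays out of the AI payload until someone deliberately approves it.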

Technijian’s Healthcare AI Implementation:

- HIPAA-compliant Azure OpenAI deployment

- Business Associate Agreements secured

- Medical Board of California compliance documentation

- Integration with EHR systems (Epic, Cerner, athenahealth)

- Staff training on permitted vs. prohibited AI uses

- Audit logging for HIPAA accountability

Migration & Change Management Questions

Q: How do we get employees to actually follow the AI policy?

A: Policy success requires these elements:

Make Compliance Easy:

- Deploy enterprise AI BEFORE prohibiting consumer tools

- Make enterprise AI as easy to access as ChatGPT (single sign-on, bookmarks, desktop shortcuts)

- Provide use-case specific guides (“How Marketing Can Use AI Safely”)

Explain Real Stakes:

- Share actual California case studies in training (law firm, healthcare practice examples)

- Quantify personal risk (“Policy violation can result in termination, no unemployment eligibility”)

- Demonstrate business value (“Enterprise AI protects YOUR job by preventing company bankruptcy from fines”)

Incentivize Adoption:

- Gamify training completion (certificates, team competitions)

- Recognize “AI champions” who find creative secure use cases

- Include AI policy compliance in performance reviews

Make Violations Difficult:

- Block consumer AI at firewall (after enterprise deployed)

- Monitor for shadow IT (Technijian provides this)

- DLP alerts for sensitive data in unauthorized platforms

- Require approval for new tool requests (prevents workarounds)

Create Feedback Loops:

- Monthly “AI office hours” for questions

- Anonymous violation reporting channel

- Quarterly policy updates based on employee input

- Executive modeling (leadership uses enterprise AI visibly)
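
For readers wondering what a “DLP alert for sensitive data” might look like in practice, here is a deliberately small Python sketch. The patterns and the function name are illustrative assumptions; commercial DLP products use far richer detection than three regular expressions.

```python
import re

# Illustrative patterns only (assumptions for this sketch).
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "confidential_marker": re.compile(r"\b(?:confidential|privileged)\b", re.IGNORECASE),
}


def find_sensitive(text: str) -> list[str]:
    """Return the sorted names of every pattern that matches the text."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))


if __name__ == "__main__":
    prompt = "Summarize this CONFIDENTIAL settlement for the client with SSN 123-45-6789"
    matches = find_sensitive(prompt)
    if matches:
        print("Alert: prompt matched", matches)
    else:
        print("No sensitive patterns found")
```

A check like this would typically run before a prompt leaves the network, so the alert fires on the attempt rather than after the data is gone.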

Technijian’s Change Management Support:

- Custom training programs for each department

- “Lunch and learn” AI demonstration sessions

- Quick-reference guides and desk reminders

- Help desk for AI questions (reduces friction)

- Usage analytics showing team adoption progress

Q: What if employees resist because they like their personal ChatGPT?

A: Address the emotional/practical concerns:

Common Resistance Patterns:

“ChatGPT is better/faster/easier!” Response: “You’re right that consumer ChatGPT is optimized for ease of use. Our enterprise platform uses the exact same AI model—same capabilities—with added security. Let’s walk through your most common tasks and I’ll show you it’s equally fast.”

“I’ve been using it for months with no problems!” Response: “That’s exactly the danger. The ‘problem’ isn’t immediately visible. Your data may now be part of OpenAI’s training dataset. The competitive damage happens when competitors query similar topics and get outputs influenced by YOUR inputs. It’s like carbon monoxide: odorless, colorless, deadly. Just because you don’t see harm doesn’t mean it’s safe.”

“This is corporate paranoia!” Response: “I respect that view. Let me share data: In 2024, 67% of California data breach lawsuits involved AI tools. Average settlement: $450,000. Our cyber insurance carrier increased premiums 45% specifically because we lack AI governance. This policy protects you personally from being the employee whose innocent ChatGPT use caused a lawsuit.”

“It’s not worth the hassle!” Response: “Agreed that change is frustrating. Here’s my commitment: If you find the enterprise platform slower or harder to use for your specific workflow, let me know within 30 days. We’ll either fix it or I’ll personally work with you to find an approved alternative. Fair?”

Technijian’s Adoption Psychology: We’ve deployed enterprise AI for 40+ Orange County businesses. Based on that experience:

- 15% of employees adopt immediately (early adopters)

- 70% adopt within 30 days with support (pragmatic majority)

- 15% resist until you enforce consequences (they’re testing whether policy is real)

Our change management process identifies the 15% resistors early and provides targeted intervention (one-on-one training, executive reinforcement) before enforcement becomes necessary.

How Technijian Can Help: AI Security Implementation for Orange County Businesses

Since 2000, Technijian has protected Southern California businesses through technology transitions—from cloud migration to cybersecurity modernization to now, AI governance. Our Orange County roots (headquartered in Irvine) mean we understand California’s unique regulatory environment and the practical realities of local business operations.

Technijian’s Comprehensive AI Security Services

AI Security Assessment (2-3 Weeks)

Before implementing solutions, we need to understand your current state:

- Shadow IT Discovery: We identify ALL AI tools currently in use across your organization (employees often use 3-5 different AI platforms without IT knowledge)

- Data Flow Mapping: We trace exactly what business data flows through which systems and could be exposed to AI

- Compliance Gap Analysis: We assess your specific regulatory requirements (HIPAA, CPRA, professional licensing) and identify current violations

- Risk Quantification: We calculate your actual financial exposure from current AI practices (potential fines, breach costs, insurance impacts)

- Use Case Prioritization: We identify which business processes would benefit most from secure AI implementation

Deliverable: Comprehensive AI Security Assessment Report with specific recommendations, timeline, and ROI analysis

Enterprise AI Platform Deployment (3-6 Weeks)

We implement your secure AI infrastructure:

Platform Selection & Configuration:

- Microsoft Azure OpenAI Service (ideal for Microsoft 365 organizations)

- AWS Bedrock (best for AWS cloud customers)

- Google Vertex AI (optimal for Google Workspace users)

- Specialized Platforms (legal-specific: Casetext CoCounsel; healthcare-specific: AWS HealthScribe)

Security Hardening:

- Zero training data retention enabled and verified

- California/US data center residency enforced

- Customer-managed encryption keys configured

- Multi-factor authentication required

- Role-based access controls (not every employee needs AI)

- Data Loss Prevention (DLP) integration

- Audit logging with 1-year retention

System Integration:

- Single Sign-On (SSO) with Microsoft 365 or Google Workspace

- Seamless access (employees log in once, AI ready)

- Microsoft Teams or Slack integration

- API connections to your business applications

Deliverable: Fully operational enterprise AI platform with documented security configuration

AI Governance Policy Development (1-2 Weeks)

We create customized policies for your business:

- AI Usage Policy: What employees can/can’t do with AI (tailored to your industry)

- Data Classification Guide: Which data types are AI-compatible

- Incident Response Plan: What to do if data exposure is suspected

- Vendor Management Requirements: For third parties using AI on your behalf

- Compliance Documentation: California-specific regulatory alignment

Deliverable: Complete AI governance policy package ready for employee distribution and regulatory review
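
A Data Classification Guide ultimately boils down to a lookup from data type to an allowed action. The Python sketch below shows one possible shape; the class names and decisions are hypothetical examples, not Technijian’s actual policy.

```python
# Hypothetical classification table: data class -> decision for the enterprise AI platform.
POLICY = {
    "public": "allowed",
    "internal": "allowed",
    "client_confidential": "allowed_with_review",
    "regulated": "requires_approval",
}


def decision(data_class: str) -> str:
    """Return the policy decision for a data class; unknown classes are prohibited."""
    return POLICY.get(data_class, "prohibited")


if __name__ == "__main__":
    for data_class in ("public", "client_confidential", "regulated", "unlabeled"):
        print(data_class, "->", decision(data_class))
```

Defaulting unknown classes to “prohibited” keeps unlabeled data out of AI tools until someone classifies it.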

Employee Training Program (2-4 Weeks)

We ensure your team understands and adopts new AI policies:

Training Components:

- Executive briefing (business case, liability overview)

- Department-specific sessions (sales, marketing, operations, HR, finance—each has unique AI use cases)

- Hands-on platform demonstrations

- Real-world scenario practice

- Q&A and office hours

Training Materials:

- Video modules for remote workers

- Quick-reference guides (laminated desk cards)

- Use-case libraries (pre-approved AI prompts for common tasks)

- Self-paced eLearning modules

Deliverable: Trained workforce with documented completion records (for compliance auditing)

Ongoing Managed AI Security (Monthly Service)

AI security isn’t “set and forget”; it requires continuous monitoring:

24/7 Security Monitoring:

- Real-time alerts for policy violations (e.g., attempt to upload sensitive file to unauthorized AI)

- Usage analytics (which teams use AI, for what purposes)

- Shadow IT detection (employees using unauthorized AI tools)

- Anomaly detection (unusual data access patterns)
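
Shadow IT detection often starts with something as simple as scanning web proxy or DNS logs for known consumer AI domains. The Python sketch below assumes a hypothetical log format of “timestamp user domain” per line; the domain list covers the tools named in this article and is a starting point, not a complete inventory.

```python
from collections import Counter

# Domains associated with the consumer AI tools named in this article.
CONSUMER_AI_DOMAINS = (
    "chatgpt.com",
    "chat.openai.com",
    "claude.ai",
    "gemini.google.com",
    "perplexity.ai",
)


def count_ai_hits(log_lines):
    """Count requests per (user, domain) for consumer AI domains.

    Assumes each line is 'timestamp user domain' separated by whitespace
    (a hypothetical format chosen for this sketch).
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines
        _, user, domain = parts
        domain = domain.lower()
        # Match the domain itself or any subdomain of it.
        if any(domain == d or domain.endswith("." + d) for d in CONSUMER_AI_DOMAINS):
            hits[(user, domain)] += 1
    return hits


if __name__ == "__main__":
    sample = [
        "2025-01-01T09:00:00 alice chatgpt.com",
        "2025-01-01T09:05:00 alice chatgpt.com",
        "2025-01-01T09:06:00 bob www.perplexity.ai",
        "2025-01-01T09:07:00 carol example.com",
    ]
    for (user, domain), count in count_ai_hits(sample).most_common():
        print(user, domain, count)
```

A scan like this only sees traffic on the company network, so it complements rather than replaces policy and training.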

Quarterly Compliance Reviews:

- Regulatory requirement updates (California passes new privacy laws regularly)

- Platform security patches and updates

- Policy effectiveness assessment

- User feedback integration

- Incident review and lessons learned

Executive Reporting:

- AI ROI metrics (productivity gains, risk reduction)

- Compliance status dashboard

- Usage trends and adoption rates

- Security incident summary

Helpdesk Support:

- “Can I use AI for this task?” guidance

- Platform troubleshooting

- Policy interpretation questions

- New use case evaluation

Deliverable: Continuous AI security with proactive risk management

Industry-Specific AI Solutions from Technijian

For Legal Practices:

- Casetext CoCounsel or Harvey AI deployment

- California State Bar compliance documentation

- Conflict-free AI usage (opposing parties cannot access your queries)

- Malpractice insurance coordination

- Legal-specific training (AI hallucination risks, citation verification)

For Healthcare Providers:

- HIPAA-compliant Azure OpenAI with Business Associate Agreement

- Medical Board of California compliance package

- EHR integration (Epic, Cerner, athenahealth compatibility)

- Clinical workflow optimization (note summarization, coding assistance)

- CMIA-specific authorization templates

For Financial Services:

- SEC, FINRA, and California DFPI compliance alignment

- Client data protection (portfolio info, tax returns, financial plans)

- AI-powered client communication drafting

- Compliance review workflows

- Cyber insurance documentation

For Manufacturing & Technology:

- Trade secret and intellectual property protection

- Supply chain data security

- Product development AI workflows

- Engineering documentation AI assistance

- Patent application confidentiality

For Professional Services (Consulting, Accounting, Architecture, Engineering):

- Client confidentiality protection

- Proposal and SOW generation

- Project documentation automation

- Professional liability insurance compliance

- Multi-client data isolation

Technijian’s Unique Orange County Advantage

Local Expertise:

- 20+ years serving Southern California businesses

- Deep understanding of California regulatory environment

- Existing relationships with local compliance attorneys, CPAs, insurance brokers

- Same-day on-site support available throughout Orange County

Industry Specialization:

- Healthcare: 15+ medical/dental practices protected

- Legal: 8 law firms with enterprise AI

- Financial Services: 12 advisory firms and CPAs secured

- Manufacturing: 6 OC manufacturers with protected AI workflows

Proven Methodology:

- 40+ California businesses successfully transitioned from consumer to enterprise AI

- Zero security incidents post-implementation (100% clean track record)

- Average 6-week implementation timeline

- 95% employee adoption rate within 90 days

Comprehensive Technology Partnership: Beyond AI security, Technijian manages your complete IT infrastructure:

- Cybersecurity (network security, endpoint protection, vulnerability management)

- Cloud Services (Microsoft 365, Google Workspace, AWS, Azure)

- Compliance (HIPAA, CPRA, SOC 2, CMMC)

- Managed IT (help desk, monitoring, strategic planning)

This holistic approach means your AI security integrates seamlessly with broader technology strategy—not a disconnected one-off project.

Real Technijian Client Success Story: Orange County Law Firm

Challenge: A 12-attorney employment law firm discovered associates were using personal ChatGPT to draft settlement agreements, demand letters, and case strategy memos. After a potential conflict of interest emerged (opposing counsel mentioned specifics the firm never disclosed), the managing partner realized AI exposure was the likely source.

Technijian’s Solution (4-Week Implementation):

Week 1: AI Security Assessment

- Surveyed all attorneys and staff about AI usage

- Discovered 9 of 12 attorneys used ChatGPT regularly

- Identified 250+ conversations containing privileged client information

- Quantified risk: Potential State Bar violation, malpractice exposure

Week 2: Platform Deployment

- Deployed Casetext CoCounsel (legal-specific enterprise AI)

- Configured California data residency

- Integrated with Clio practice management system

- Set up role-based access (different permissions for associates, partners, and staff)

Week 3: Policy & Training

- Created custom AI usage policy aligned with California Rules of Professional Conduct

- Trained all attorneys on: AI hallucination risks, citation verification, client confidentiality

- Developed “AI acceptable use” guides for common legal tasks

- Secured professional liability insurance documentation

Week 4: Monitoring & Enforcement

- Blocked consumer ChatGPT at firewall

- Implemented DLP to prevent case file uploads to unauthorized platforms

- Established monthly AI usage reviews

- Created incident response plan for potential data exposures

Results (12 Months Post-Implementation):

- Zero State Bar complaints or malpractice claims related to AI

- 40% productivity increase on document drafting (as reported by attorneys)

- $18,000 annual savings on professional liability insurance (15% premium reduction for documented AI governance)

- 3 new client wins citing AI security as competitive differentiator in RFPs

- 100% attorney adoption of enterprise AI within 60 days

Partner Testimonial: “Technijian transformed AI from our biggest liability into our competitive advantage. We’re now the only employment law firm in Orange County with documented AI governance—clients specifically ask about it during intake. The investment paid for itself in the first year just from insurance savings, and the productivity gains are transformative.”

Getting Started: Your AI Security Roadmap with Technijian

Initial Consultation (Complimentary – 45 Minutes)

Schedule a no-obligation assessment where we:

- Review your current AI usage patterns

- Identify immediate risk exposures

- Discuss California compliance requirements specific to your industry

- Outline potential solutions and timeline

- Provide ballpark investment estimate

No pressure, no sales pitch—just honest assessment of whether AI security is urgent for your business.

Pilot Program (Optional – 4 Weeks, Reduced Cost)

For businesses uncertain about full commitment, we offer:

- Small team pilot (5-10 users)

- Limited scope enterprise AI deployment

- Basic training and policy framework

- Proof-of-concept to demonstrate value

- Option to expand to full deployment or terminate

This lets you experience the solution before full investment.

Full Implementation (6-12 Weeks)

Complete AI security transformation:

- Comprehensive assessment and planning

- Enterprise platform deployment

- Policy development and distribution

- Organization-wide training

- Ongoing managed security

Investment Transparency: Typical Orange County small business (20-50 employees):

- Assessment: $3,500-5,500

- Platform Deployment: $8,000-15,000 (one-time)

- Policy & Training: $4,000-7,000 (one-time)

- Ongoing Managed Security: $1,500-3,000/month

Volume Discounts: 10+ employees qualify for reduced per-user rates

Financing Available: 12-36 month payment plans with approved credit

ROI Expectation: Most clients achieve 250-400% first-year ROI through risk avoidance, insurance savings, and productivity gains.
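
The price ranges above can be added up into a first-year total. The Python sketch below does that arithmetic under two assumptions that the article does not state: managed security is billed for all 12 months of the first year, and ROI is defined as (benefit - cost) / cost.

```python
# Price ranges quoted above (USD), as (low, high).
ASSESSMENT = (3_500, 5_500)
DEPLOYMENT = (8_000, 15_000)
POLICY_TRAINING = (4_000, 7_000)
MANAGED_MONTHLY = (1_500, 3_000)


def first_year_cost(months: int = 12) -> tuple[int, int]:
    """Return (low, high) first-year cost, assuming `months` of managed security."""
    low = ASSESSMENT[0] + DEPLOYMENT[0] + POLICY_TRAINING[0] + MANAGED_MONTHLY[0] * months
    high = ASSESSMENT[1] + DEPLOYMENT[1] + POLICY_TRAINING[1] + MANAGED_MONTHLY[1] * months
    return low, high


def implied_benefit(cost: int, roi_percent: int) -> int:
    """Benefit implied by ROI = (benefit - cost) / cost, an assumed definition."""
    return cost * (100 + roi_percent) // 100


if __name__ == "__main__":
    low, high = first_year_cost()
    print(low, high)                   # 33500 63500
    print(implied_benefit(low, 250))   # 117250
    print(implied_benefit(high, 400))  # 317500
```

Under these assumptions, a 250-400% first-year ROI implies roughly $117,000 to $318,000 in combined risk avoidance, insurance savings, and productivity gains.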

Take Action: Protect Your Business from AI Data Exposure

Every day you delay implementing enterprise AI security, your business data remains vulnerable. Consumer AI platforms may retain new inputs and use them to train future models, so yesterday’s exposure can become tomorrow’s permanent data contamination.

Don’t wait for a data breach, regulatory investigation, or competitive intelligence leak to force action.

Book Your AI Workflow & Security Demo with Technijian

What You’ll Experience:

- Live demonstration of enterprise AI platforms vs. consumer tools

- Personalized risk assessment for your specific business

- Use-case workshop showing how AI can safely transform your workflows

- Compliance roadmap for California regulatory requirements

- No-obligation proposal with specific timeline and investment

Demo Format:

- Duration: 60-90 minutes

- Location: Your office (throughout Orange County) or virtual via Teams/Zoom

- Attendees: Bring your leadership team, IT staff, compliance officer

- Preparation Required: None—we’ll guide you through everything

Schedule Your Demo:

📞 Call Technijian: (949)-379-8500

🌐 Online Scheduler: https://technijian.com/schedule-an-appointment/

📍 Visit Us: 18 Technology Dr, #141 Irvine, CA 92618

Mention “AI Data Security Blog” when booking to receive:

- Complimentary AI Security Assessment ($4,500 value)

- Extended demo with department-specific use cases

- Priority scheduling within 5 business days

Final Thoughts: The AI Security Decision That Defines Your Business Future

We’re at an inflection point in business technology. AI isn’t going away—it’s becoming fundamental to competitive operations across every industry. The question isn’t WHETHER to use AI, but HOW to use it safely.

Consumer AI platforms like personal ChatGPT were never designed for business data. They were built for individual creativity, learning, and convenience—not corporate data governance. Using them for business is like using a personal Gmail account for client communications instead of your company email: technically possible, but professionally irresponsible.

California’s regulatory environment reflects this reality. CPRA, CCPA, HIPAA, professional licensing boards—all now recognize AI as a significant data protection concern. Enforcement is accelerating, not slowing. Early adopters who implement AI governance now will thrive. Businesses that ignore these risks will face consequences ranging from regulatory fines to competitive obsolescence.

Technijian has guided Orange County businesses through every major technology transition of the past 20 years: Y2K preparation, cloud migration, mobile security, ransomware protection, and now AI governance. This transition is no different—early action minimizes disruption and maximizes opportunity.

The businesses that succeed in the AI era will be those that harness its power while protecting their data assets.

Don’t let your employees’ well-intentioned AI usage become your company’s biggest liability. Take control of AI in your organization—before AI exposes your organization.

Contact Technijian today to begin your AI security journey.

About Technijian

Technijian is Orange County’s premier managed IT services and cybersecurity provider, serving Southern California businesses since 2000. Founded by Ravi Jain and headquartered in Irvine, California, Technijian delivers comprehensive technology solutions including managed IT services, cybersecurity, cloud infrastructure, AI consulting, compliance services (HIPAA, CPRA, SOC 2), and digital marketing.

With 20+ years protecting local businesses, Technijian combines deep California regulatory expertise with cutting-edge technology solutions. Our client-first approach emphasizes education, transparency, and long-term partnerships—not just vendor relationships.

Serving industries including healthcare, legal, financial services, manufacturing, professional services, and technology throughout Orange County, Los Angeles County, and Southern California.