HIPAA-Compliant AI: How to Use Copilot, ChatGPT, and VDI Safely in Healthcare

🎙️ Dive Deeper with Our Podcast!

HIPAA-Compliant AI: Safe Healthcare Implementation Strategy

👉 Listen to the Episode: https://technijian.com/podcast/hipaa-compliant-ai-safe-healthcare-implementation-strategy/


Introduction: The AI Dilemma Every Healthcare Organization Faces

Your clinical staff is already using AI. They’re asking ChatGPT to summarize patient notes, using Microsoft Copilot to draft correspondence, and leveraging AI tools to research treatment protocols. The productivity gains are undeniable—tasks that took hours now take minutes, documentation that was a burden becomes manageable, and information that was buried in countless sources becomes instantly accessible.

There’s just one problem: any of these AI interactions that involves patient information could be a HIPAA violation, exposing your organization to substantial fines, regulatory action, and reputational damage.

Healthcare organizations face an impossible choice. Ban AI tools entirely and watch your team find workarounds while falling behind competitors. Or allow AI usage and hope that no Protected Health Information (PHI) accidentally gets uploaded to systems that aren’t HIPAA-compliant, aren’t covered by Business Associate Agreements, and could expose patient data to unauthorized parties.

What if you could harness the transformative power of AI tools while maintaining HIPAA compliance you can demonstrate to auditors?

The answer lies in properly architected AI environments that combine Business Associate Agreements, Virtual Desktop Infrastructure, data loss prevention, and intelligent access controls to create a framework where AI enhances healthcare delivery without compromising patient privacy. This isn’t about choosing between innovation and compliance—it’s about implementing both correctly.

Why Standard AI Tools Create HIPAA Violations in Healthcare

Before exploring compliant solutions, let’s acknowledge the serious compliance risks that standard AI tool usage creates in healthcare settings.

The Fundamental HIPAA Problems with Consumer AI Tools

When healthcare staff uses consumer versions of ChatGPT, Claude, Copilot, or other AI tools with default configurations, they’re creating potential HIPAA violations with every interaction that involves patient information. The problems run deeper than most healthcare organizations realize.

Data transmission to unauthorized parties occurs the moment someone pastes patient information into a consumer AI interface. That data flows to servers operated by the AI provider, who becomes an unauthorized recipient of PHI unless a proper Business Associate Agreement is in place. Even if the information seems anonymized, the combination of clinical details, demographics, and context can often re-identify patients.

Training data contamination poses long-term risks. Many consumer AI tools use conversation data to improve their models. When patient information enters these training datasets, it could theoretically be reconstructed or referenced in responses to other users. Even if providers claim they don’t train on your data, proving this becomes difficult during a HIPAA audit.

Lack of access controls means there’s no audit trail showing who accessed what patient information through AI interactions. HIPAA’s Security Rule requires audit controls that record and examine activity in systems containing electronic PHI, but consumer AI tools don’t provide the granular access logs, identity provider integration, or audit capabilities that healthcare compliance demands.

Data persistence and retention create ongoing exposure. Conversations with AI tools may be stored indefinitely, backed up across multiple geographic regions, and retained long after the original clinical purpose has passed. Consumer AI tools apply their own retention policies, which rarely match your organization’s retention schedules or the minimum necessary standard’s expectation that PHI be used and disclosed only to the extent a task requires.

Cross-border data transfers complicate compliance when AI providers process data in multiple countries. HIPAA doesn’t prohibit offshore processing outright, but different privacy regulations, government access rights, and legal frameworks can subject patient data to foreign jurisdiction, which complicates your risk analysis and makes safeguards harder to verify.

The Risk Extends Beyond Direct PHI Entry

Healthcare organizations often assume the problem is limited to staff directly entering obvious patient identifiers into AI tools. The reality is far more nuanced, and the risk extends to situations that seem innocuous.

Clinical context without identifiers can still constitute PHI. A physician asking an AI tool about treatment approaches for a rare condition in a specific age group with particular comorbidities may not mention a name, but the combination of factors could identify a patient in a small practice or community. HIPAA’s definition of PHI extends beyond obvious identifiers to any information that could reasonably be used to identify an individual.
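To make the small-cohort risk concrete, one common way to measure it is k-anonymity. You group records by their quasi-identifiers, meaning attributes like age band and diagnosis that aren’t names but still narrow down who someone is, and you look for groups smaller than a threshold k. The sketch below is a minimal illustration that assumes records are plain dictionaries with hypothetical field names. It is not HIPAA’s legal test for identifiability.

```python
from collections import Counter

# Hypothetical quasi-identifier fields; a real analysis would consider many more.
QUASI_IDENTIFIERS = ("age_band", "diagnosis", "comorbidity", "zip3")


def group_sizes(records, keys=QUASI_IDENTIFIERS):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(record[key] for key in keys) for record in records)


def risky_combinations(records, k=5, keys=QUASI_IDENTIFIERS):
    """Return combinations shared by fewer than k patients (re-identification candidates)."""
    return [combo for combo, count in group_sizes(records, keys).items() if count < k]


if __name__ == "__main__":
    # Illustrative records only.
    common = {"age_band": "40-49", "diagnosis": "type 2 diabetes",
              "comorbidity": "hypertension", "zip3": "926"}
    rare = {"age_band": "10-19", "diagnosis": "Fabry disease",
            "comorbidity": "chronic kidney disease", "zip3": "926"}
    records = [common] * 6 + [rare]
    print(risky_combinations(records, k=5))
    # [('10-19', 'Fabry disease', 'chronic kidney disease', '926')]
```

In this sample, the one adolescent with a rare disease forms a group of one. A detailed clinical prompt to an AI tool can carry that same kind of unique combination, even when it contains no name.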

Email and document processing through AI-powered features in Microsoft 365, Google Workspace, or other productivity tools can expose PHI when those tools scan content for suggestions, summaries, or improvements. That seemingly helpful feature that offers to summarize your inbox or suggest responses becomes a HIPAA concern when emails contain patient information.

Voice-to-text services and transcription tools that use AI frequently upload audio to cloud services for processing. When physicians dictate clinical notes, nurses record patient interactions, or staff transcribe medical histories using AI-powered transcription, they’re potentially transmitting PHI to services without appropriate safeguards.

AI-powered search and indexing features in enterprise tools may process and cache patient information as they analyze documents, emails, and files. Even if the AI tool itself seems compliant, the underlying search, indexing, and analysis functions could create unauthorized PHI disclosures.

The cumulative risk compounds when multiple staff members use AI tools inappropriately. Even if individual violations seem minor, the aggregate exposure across an organization creates substantial liability and demonstrates systemic compliance failures that regulators view harshly.

The Power of HIPAA-Compliant AI: Secure Innovation in Healthcare

What Makes AI Tools HIPAA-Compliant?

HIPAA-compliant AI implementation isn’t about avoiding AI entirely—it’s about architecting AI usage within a framework that satisfies regulatory requirements while preserving the productivity and innovation benefits that make these tools valuable.

Business Associate Agreements form the legal foundation. Any AI tool or service that will process PHI must sign a BAA accepting responsibility for protecting patient data and acknowledging their obligations under HIPAA. The BAA doesn’t make a tool compliant by itself, but it’s an absolute prerequisite. Microsoft, Google, and other major providers offer BAA-covered versions of their AI services for healthcare customers, but these often require enterprise agreements and specific configurations that differ from standard consumer offerings.

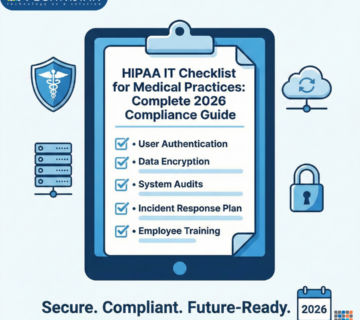

Technical safeguards must align with HIPAA’s Security Rule requirements. This means encryption in transit and at rest, strong authentication mechanisms, automatic session timeouts, role-based access controls, and comprehensive audit logging. AI tools deployed for healthcare use need to integrate with your existing identity management, honor access restrictions based on minimum necessary principles, and generate detailed logs showing who accessed what information when.

Administrative controls ensure that policies, training, and oversight mechanisms govern how AI tools are used. Even with technically compliant infrastructure, you need clear policies defining acceptable AI use cases, staff training on compliant AI usage, regular audits verifying policy adherence, and incident response procedures for addressing potential violations.

Physical safeguards govern facility access, workstation use and security, and device and media controls. For cloud-hosted AI, much of this responsibility sits with the provider. HIPAA-compliant AI implementations therefore need to specify which geographic regions will process data, obtain assurance of physical security at data center facilities, maintain control over backup and disaster recovery procedures, and keep documentation for compliance audits.

The Role of Virtual Desktop Infrastructure in Secure AI Usage

Virtual Desktop Infrastructure (VDI) provides one of the most effective approaches for enabling compliant AI tool usage in healthcare organizations. VDI creates a controlled environment where AI interactions happen within your security perimeter rather than on local devices that might be lost, stolen, or compromised.

Centralized control over AI tool access means your IT team can configure which AI services are available, ensure all AI interactions happen through compliant channels, enforce data loss prevention policies that prevent copying PHI to unauthorized tools, and rapidly update configurations across your entire organization. When a new AI tool becomes available with a proper BAA, you can enable it centrally. When a security concern arises, you can disable problematic tools instantly for all users.

PHI stays within your environment when VDI is properly configured, while AI capabilities remain available. Users can interact with AI tools that process queries on your own servers or in BAA-covered cloud services, or they can send appropriately sanitized requests to other external services. The VDI layer acts as a policy enforcement point, inspecting data flows and blocking prohibited actions before data leaves your control.

Session recording and monitoring capabilities in VDI platforms enable comprehensive oversight of AI tool usage. Security teams can review sessions where AI tools were accessed, identify patterns suggesting inappropriate PHI disclosure, detect training needs where staff struggle with compliant AI usage, and generate evidence of compliance controls for auditors.

Device independence means clinical staff can access compliant AI tools from any endpoint (hospital workstations, home computers, tablets, or smartphones) while maintaining consistent security controls. The VDI session runs on secure servers in your data center or trusted cloud environment. The endpoint displays PHI but does not store or process it locally, provided clipboard, file transfer, and local printing are restricted.

The Winning Approach: Implementing HIPAA-Compliant AI

Microsoft Copilot for Healthcare: Enterprise Implementation

Microsoft 365 Copilot is one of the most widely adopted AI tools in healthcare, but many organizations deploy it without the configuration HIPAA compliance requires. Copilot used with a personal Microsoft account is a consumer service that falls outside your organization’s agreements. Microsoft 365 Copilot used within your organization’s tenant is the version to evaluate for PHI, and you should confirm that Microsoft’s BAA and product terms list it as in scope before any PHI is involved.

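Eligible Microsoft 365 licensing provides the foundation for HIPAA-compliant Copilot usage. Microsoft 365 Copilot is sold as an add-on license for qualifying plans such as Microsoft 365 E3, E5, and Business Premium. The compliance capabilities healthcare organizations rely on, including Microsoft Purview data loss prevention, information protection, and audit, vary by plan, and E5 or the relevant compliance add-ons unlock the more advanced features. Microsoft states that prompts, responses, and organizational data accessed through Microsoft Graph are not used to train the foundation models behind Microsoft 365 Copilot. This is a product commitment, not a feature you enable through licensing.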

Execution within your Microsoft 365 tenant’s security boundary ensures that Copilot processes your data within an environment covered by your BAA with Microsoft. When properly configured, Copilot for Microsoft 365 doesn’t send your content to external systems for training, respects your data residency requirements, honors your retention policies, and integrates with your sensitivity labels and access controls.

Microsoft Purview data loss prevention policies can restrict what Copilot processes. For example, a DLP policy scoped to Microsoft 365 Copilot can prevent Copilot from summarizing or using files and emails that carry specified sensitivity labels, such as a PHI label, and alerts can flag potential policy violations. Microsoft is actively expanding these Copilot-specific controls, so confirm which ones are generally available for your licensing. Requirements such as additional authentication belong in Microsoft Entra Conditional Access rather than in DLP.

Sensitivity labels and information protection automatically classify content and enforce appropriate handling. When clinical documents are properly labeled, Copilot respects those classifications, applying appropriate security controls. Labels can trigger encryption, restrict sharing permissions, require specific authentication levels, and persist across your environment regardless of where files travel.

Audit logging provides the activity records that HIPAA’s audit controls standard calls for. Microsoft Purview Audit records Copilot interaction events, including which user interacted with Copilot, when, and which files or messages Copilot referenced. The prompts and responses themselves are retained in Microsoft 365, where Purview eDiscovery can search and preserve them and retention policies can govern them. Together, these records become critical evidence during compliance audits or breach investigations.

ChatGPT Enterprise and Azure OpenAI for Healthcare

OpenAI’s ChatGPT captured global attention with its capabilities, but consumer ChatGPT plans aren’t covered by a BAA, so entering patient information into them is an impermissible disclosure under HIPAA. Healthcare organizations need enterprise-grade OpenAI access deployed through compliant frameworks.

ChatGPT Enterprise can be used with PHI only when OpenAI has signed a BAA covering your workspace and the workspace is configured according to that agreement. This enterprise tier provides a commitment that your conversations and data aren’t used to train OpenAI’s models by default, a SOC 2 Type II report attesting to its security controls, administrative features for managing users and monitoring usage, and eligibility for a BAA for healthcare use.

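Azure OpenAI Service provides an even more controlled approach. You create the Azure OpenAI resource in your own Azure subscription and chosen region, under your BAA with Microsoft. The models are hosted and operated by Microsoft within Azure rather than by OpenAI, and Microsoft states that prompts and completions are not shared with OpenAI or used to train models. You control access policies and networking, and you get visibility into usage through Azure monitoring tools. Two caveats matter for PHI. First, Global deployment types may process data in any Azure region, so choose a regional or data zone deployment if residency matters. Second, Microsoft’s abuse monitoring may store prompts and completions for up to 30 days unless you apply for, and are approved for, modified abuse monitoring.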

API-based integration with proper guardrails enables embedding AI capabilities into your clinical applications securely. Rather than staff accessing ChatGPT directly, your applications can call Azure OpenAI APIs with PHI stripped or appropriately protected. The application layer can enforce business rules around acceptable AI usage, sanitize inputs before sending to AI services, validate outputs before displaying to users, and maintain audit trails linking AI interactions to clinical workflows.
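One way to strip PHI while keeping responses useful is reversible pseudonymization. The application swaps identifiers for placeholder tokens before the call, keeps the token map locally, and restores the identifiers in the response. The sketch below is a minimal illustration under stated assumptions. It handles only a hypothetical medical record number format, and `send` is a hypothetical stand-in for whatever client calls your Azure OpenAI deployment. A real guardrail layer needs much broader identifier detection.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical MRN format ("MRN" + 6-10 digits); real formats vary by EHR.
MRN_PATTERN = re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE)


def pseudonymize(text: str) -> Tuple[str, Dict[str, str]]:
    """Swap each distinct MRN for a placeholder token; the token map stays in the app."""
    token_for: Dict[str, str] = {}

    def swap(match: re.Match) -> str:
        value = match.group(0)
        if value not in token_for:
            token_for[value] = f"[PATIENT_{len(token_for) + 1}]"
        return token_for[value]

    masked = MRN_PATTERN.sub(swap, text)
    return masked, {token: value for value, token in token_for.items()}


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Put the original identifiers back into the model's response, locally."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text


def guarded_completion(prompt: str, send: Callable[[str], str], audit_log: List[dict]) -> str:
    """Mask identifiers, call the model, validate its output, and record an audit entry."""
    masked, mapping = pseudonymize(prompt)
    response = send(masked)  # only placeholder tokens leave the application boundary
    if MRN_PATTERN.search(response):
        # Output validation: the model should never emit a raw identifier.
        raise ValueError("model response contained an unmasked MRN")
    audit_log.append({"prompt_sent": masked, "identifiers_masked": len(mapping)})
    return restore(response, mapping)


if __name__ == "__main__":
    log: List[dict] = []
    echo_model = lambda p: "Draft: " + p  # stand-in for a real model call
    print(guarded_completion("Schedule follow-up for MRN 12345678 next week", echo_model, log))
    # Draft: Schedule follow-up for MRN 12345678 next week
    print(log[0]["prompt_sent"])
    # Schedule follow-up for [PATIENT_1] next week
```

With this design, the external service only ever sees `[PATIENT_1]`. The mapping needed to re-identify the patient never leaves your application, and the audit entry records what was actually sent.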

Custom model fine-tuning on your clinical data is also possible with Azure OpenAI Service. Training data and the resulting fine-tuned model stay within your Azure OpenAI resource and are not shared with OpenAI, so you can adapt a model to your organization’s specific terminology, care protocols, and documentation patterns while keeping it under your control. Training on historical patient notes is still a use of PHI. Document the purpose, apply the minimum necessary standard, and consider de-identifying training data where the task allows.

Virtual Desktop Infrastructure: The Containment Strategy

VDI provides a powerful containment strategy for organizations wanting to enable multiple AI tools while maintaining comprehensive oversight and control. Modern VDI solutions from vendors like Citrix, VMware, Windows 365, or Amazon WorkSpaces create isolated environments where AI interactions can happen safely.

Application delivery through VDI means staff access AI tools running on centrally managed virtual machines rather than local installations. Your IT team maintains a gold image with properly configured AI tools, BAA-covered services with appropriate authentication, data loss prevention agents monitoring activity, and security policies enforced by the VDI platform. Users get the full functionality of AI tools while the technical controls happen transparently.

Network isolation keeps AI-related data flows within your controlled environment. VDI sessions can run in network segments with specific security policies, restrict which external services can be reached, force all internet traffic through your web filtering and DLP systems, and prevent direct connections from user devices to AI services. This network architecture makes it dramatically easier to monitor and control how data reaches external AI providers.
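The core of an egress policy for VDI session networks is an allowlist check. In practice, your proxy or firewall enforces it. The sketch below shows that logic only, assuming a hypothetical Azure OpenAI resource hostname (`contoso-clinical.openai.azure.com`). It matches whole DNS labels so that look-alike hosts are rejected.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: your own BAA-covered endpoints. Listing your specific
# resource host, rather than all of openai.azure.com, keeps other organizations'
# Azure OpenAI resources off the list.
APPROVED_AI_DOMAINS = ("contoso-clinical.openai.azure.com",)


def is_egress_allowed(url: str, approved=APPROVED_AI_DOMAINS) -> bool:
    """Allow HTTPS requests only to an approved domain or one of its subdomains."""
    parts = urlsplit(url)
    host = (parts.hostname or "").rstrip(".")  # .hostname is already lowercased
    if parts.scheme != "https" or not host:
        return False
    # Compare whole labels so "approved.example.attacker.net" does not match.
    return any(host == domain or host.endswith("." + domain) for domain in approved)


if __name__ == "__main__":
    for url in ("https://contoso-clinical.openai.azure.com/openai/deployments/notes",
                "https://chatgpt.com/",
                "https://contoso-clinical.openai.azure.com.attacker.example/"):
        print(url, is_egress_allowed(url))
```

A default-deny rule like this is easier to audit than a blocklist, because new consumer AI services stay blocked until someone approves them.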

Clipboard and file transfer controls prevent users from copying PHI from your VDI environment to local devices where it could be pasted into non-compliant AI tools. These controls can block clipboard operations entirely, allow only plaintext clipboard data stripped of formatting, require approval for any file downloads, and log all data transfer attempts. Staff can still work productively, but attempts to exfiltrate PHI are blocked or flagged.

Persistent versus non-persistent VDI options affect where AI tool state and cached data live. Persistent VDI gives each user a dedicated virtual desktop that maintains state across sessions. This makes it easier to install user-specific tools, but it leaves more cached data to secure and requires more storage and management. Non-persistent VDI provisions a fresh virtual desktop for each session from a master image and discards local data at logoff. This provides stronger security guarantees but can complicate AI tool configurations that rely on session history.

Implementing HIPAA-Compliant AI: A Strategic Approach

Step 1: Conduct an AI Usage Assessment and Risk Analysis

Before implementing any compliant AI infrastructure, you need to understand how AI tools are currently being used—or misused—across your organization. This discovery phase reveals risks and informs your compliance strategy.

Survey clinical and administrative staff about their AI tool usage. Create psychological safety for honest responses by emphasizing that the goal is enabling productive AI usage compliantly, not punishing current use. Ask which AI tools they use regularly, what tasks they accomplish with AI assistance, whether they’ve entered patient information into AI tools, and what productivity benefits they’ve experienced. The results often surprise leadership with the pervasiveness of unsanctioned AI usage.

Analyze network traffic and security logs to identify unauthorized AI service usage. Web filtering logs, firewall data, and cloud access security broker tools can reveal connections to ChatGPT, Claude, Gemini, and other AI services that may indicate policy violations. This technical analysis complements survey data and often uncovers usage that staff didn’t report or didn’t realize qualified as AI tool usage.
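As a starting point for this analysis, you can count requests to AI service domains per user from an exported log. The sketch below assumes a hypothetical CSV export with `timestamp,user,host` columns. Real proxy, firewall, and CASB exports use their own formats. The domain list is illustrative, not exhaustive, and the sample rows are made up.

```python
import csv
import io
from collections import Counter

# Illustrative, not exhaustive: domains of widely used AI services.
AI_SERVICE_DOMAINS = ("chatgpt.com", "openai.com", "claude.ai", "gemini.google.com")


def is_ai_host(host: str) -> bool:
    """True if host is one of the listed domains or a subdomain of one."""
    host = host.strip().lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in AI_SERVICE_DOMAINS)


def ai_requests_by_user(log_file) -> Counter:
    """Count requests per user to AI service domains in a CSV proxy export."""
    counts: Counter = Counter()
    for row in csv.DictReader(log_file):
        if is_ai_host(row["host"]):
            counts[row["user"]] += 1
    return counts


if __name__ == "__main__":
    sample = io.StringIO(
        "timestamp,user,host\n"
        "2024-05-01T09:00:00Z,jdoe,chatgpt.com\n"
        "2024-05-01T09:05:00Z,jdoe,chatgpt.com\n"
        "2024-05-01T09:07:00Z,asmith,claude.ai\n"
        "2024-05-01T09:10:00Z,asmith,intranet.example.org\n"
    )
    print(ai_requests_by_user(sample).most_common())
    # [('jdoe', 2), ('asmith', 1)]
```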

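Document your current risk exposure by mapping where PHI might have been disclosed to non-compliant services. While you can’t perfectly reconstruct historical usage, understanding the scope and nature of potential violations informs your remediation strategy. Treat what you find as potential breaches. Under the Breach Notification Rule, an impermissible disclosure of PHI is presumed to be a breach unless a documented risk assessment shows a low probability that the information was compromised, so involve your privacy officer early. This assessment also establishes your starting point for demonstrating improved compliance post-implementation.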

Identify legitimate use cases where AI tools provide measurable value. Not every AI application makes sense for healthcare, and some applications carry higher risk than others. Prioritize use cases that deliver clear productivity gains, improve clinical decision-making, enhance patient experience, or reduce documentation burden while minimizing PHI exposure.

Step 2: Establish Legal and Contractual Foundations

HIPAA-compliant AI usage requires proper legal agreements with your technology vendors. These contracts define each party’s obligations when vendors create, receive, maintain, or transmit PHI on your behalf.

Execute Business Associate Agreements with every vendor whose AI services will process PHI. This includes Microsoft for Copilot and Azure OpenAI, OpenAI if you use ChatGPT Enterprise directly, VDI or desktop-as-a-service providers that host sessions containing PHI, any middleware or integration vendors, and cloud infrastructure providers. Confirm that the BAA, or the vendor’s list of in-scope services it references, specifically covers the AI services you use, not just general technology services. Review BAA language carefully to ensure it addresses AI-specific concerns like model training, data retention, and subprocessor usage.

Document your data processing arrangements clearly. HIPAA’s risk analysis requirement means you need to know where electronic PHI is created, received, maintained, and transmitted, who has access to it, and what safeguards are in place. Create data flow diagrams showing how patient information moves through your AI-enabled workflows. These diagrams become essential during audits and help identify potential gaps in your compliance program.
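A data flow diagram can also be kept as a machine-readable inventory, so gaps can be checked automatically whenever a workflow changes. The sketch below assumes a simple list of source-to-destination flows, each marked as carrying PHI or not. It flags any PHI flow that reaches an external party with no signed BAA. All system names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Flow:
    source: str
    destination: str
    carries_phi: bool


def flows_needing_attention(flows: List[Flow], internal: FrozenSet[str],
                            baa_vendors: FrozenSet[str]) -> List[Flow]:
    """Return PHI flows that reach an external party with no signed BAA on file."""
    return [
        f for f in flows
        if f.carries_phi and f.destination not in internal and f.destination not in baa_vendors
    ]


if __name__ == "__main__":
    # Hypothetical inventory for illustration.
    inventory = [
        Flow("EHR", "VDI session host", True),
        Flow("VDI session host", "Azure OpenAI (BAA)", True),
        Flow("VDI session host", "Dictation app", True),
        Flow("Intranet", "Public literature search", False),
    ]
    gaps = flows_needing_attention(
        inventory,
        internal=frozenset({"EHR", "VDI session host", "Intranet"}),
        baa_vendors=frozenset({"Azure OpenAI (BAA)"}),
    )
    for flow in gaps:
        print(f"{flow.source} -> {flow.destination}")
    # VDI session host -> Dictation app
```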

Negotiate appropriate service level agreements that address healthcare-specific requirements. SLAs should cover availability requirements for clinical workflows depending on AI, incident notification timelines when breaches or suspected breaches occur, audit rights allowing you to verify vendor compliance, and data retrieval procedures if you need to transition away from a vendor.

Review your Notice of Privacy Practices as well. HIPAA doesn’t specifically require the notice to mention AI, but the notice must accurately describe how you use and disclose PHI. Telling patients that AI tools may support their care delivery and data processing also builds trust. You don’t need to describe every technical detail.

Step 3: Implement Technical Controls and Infrastructure

With legal foundations in place, you can deploy the technical infrastructure that makes compliant AI usage possible. This phase involves configuring systems, establishing policies, and integrating controls.

Deploy or configure VDI infrastructure if you’re using this containment approach. Size your VDI environment based on the number of users who need AI access, application performance requirements, and data processing location constraints. Implement high availability and disaster recovery capabilities ensuring clinical workflows depending on AI remain available. Configure network security policies controlling how VDI sessions access external AI services.

Enable and configure HIPAA-eligible AI services within your environment. For Microsoft Copilot, this means enabling compliance features in Microsoft 365, configuring sensitivity labels for PHI, implementing data loss prevention policies, and restricting Copilot access to appropriately licensed users. For Azure OpenAI, deploy services in your chosen Azure region, configure private endpoints eliminating public internet exposure, implement managed identities for secure authentication, and establish monitoring and alerting.
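You can check the Azure OpenAI hardening steps in a scripted review. The sketch below assumes the resource JSON has the shape returned by `az cognitiveservices account show`, with `publicNetworkAccess`, `privateEndpointConnections`, and `disableLocalAuth` under `properties`. Verify those property names against your own output. The approved-region set is a hypothetical input.

```python
from typing import Dict, List, Set


def review_openai_resource(resource: Dict, allowed_regions: Set[str]) -> List[str]:
    """Flag settings that don't match the hardening steps for a PHI workload."""
    props = resource.get("properties", {})
    findings: List[str] = []
    if resource.get("location") not in allowed_regions:
        findings.append(f"region {resource.get('location')!r} is not an approved region")
    if props.get("publicNetworkAccess") != "Disabled":
        findings.append("public network access is not disabled")
    if not props.get("privateEndpointConnections"):
        findings.append("no private endpoint connection found")
    if props.get("disableLocalAuth") is not True:
        # Disabling key auth forces callers onto Microsoft Entra ID (e.g. managed identities).
        findings.append("API key authentication is still enabled; prefer Microsoft Entra ID")
    return findings


if __name__ == "__main__":
    hardened = {
        "location": "eastus2",
        "properties": {
            "publicNetworkAccess": "Disabled",
            "privateEndpointConnections": [{"name": "pe-clinical"}],
            "disableLocalAuth": True,
        },
    }
    print(review_openai_resource(hardened, {"eastus2"}))  # []
```

Running a check like this on a schedule helps catch configuration drift between formal audits.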

Integrate AI platforms with your identity and access management system. Single sign-on, multi-factor authentication, role-based access controls, and automatic deprovisioning when users leave all become critical for AI services just as they are for your EHR and other clinical systems. Configure conditional access policies that might require additional authentication for AI access from unusual locations or impose device compliance requirements.
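Identity platforms such as Microsoft Entra Conditional Access evaluate rules like these for you. The sketch below only mirrors the decision logic so the policy is easy to reason about. The role names and request fields are hypothetical, and in practice role membership would come from your identity provider’s groups.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical roles approved for AI access.
AI_APPROVED_ROLES = {"physician", "nurse", "care_coordinator"}


@dataclass
class AccessRequest:
    account_active: bool            # False once deprovisioning has run
    roles: Set[str] = field(default_factory=set)
    mfa_completed: bool = False
    device_compliant: bool = False
    location_trusted: bool = False


def evaluate_ai_access(req: AccessRequest) -> str:
    """Return 'allow', 'require_mfa', or 'deny' for a request to reach an AI service."""
    if not req.account_active:
        return "deny"
    if not (req.roles & AI_APPROVED_ROLES):
        return "deny"
    if not req.device_compliant:
        return "deny"
    if not req.location_trusted and not req.mfa_completed:
        return "require_mfa"  # step-up authentication from unusual locations
    return "allow"


if __name__ == "__main__":
    print(evaluate_ai_access(AccessRequest(True, {"nurse"}, mfa_completed=False,
                                           device_compliant=True, location_trusted=False)))
    # require_mfa
```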

Implement data loss prevention technologies that can identify PHI in text and prevent unauthorized disclosure. Modern DLP tools use AI themselves to recognize patterns indicating patient information, even when obvious identifiers are removed. Configure DLP policies to block copying PHI to unauthorized AI services, alert security teams to attempted policy violations, provide user education at the point of policy violation, and generate compliance reports for management review.
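The block-or-alert logic behind such a DLP policy can be sketched with a few pattern detectors. This is a deliberately simplified illustration. The regular expressions and confidence levels are hypothetical, and commercial DLP engines add keyword proximity, checksums, context, and machine learning on top of patterns like these.

```python
import re
from typing import List, Tuple

# Illustrative detectors: (pattern, confidence).
DETECTORS = {
    "ssn": (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "high"),
    "mrn": (re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE), "high"),
    "date_of_birth": (re.compile(r"\b(?:DOB|date of birth)[:\s]*\d{1,2}/\d{1,2}/\d{4}\b",
                                 re.IGNORECASE), "high"),
    "phone": (re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"), "low"),
}


def dlp_decision(text: str, destination_covered_by_baa: bool) -> Tuple[str, List[str]]:
    """Return ('allow' | 'alert' | 'block', names of detectors that matched)."""
    hits = [name for name, (pattern, _) in DETECTORS.items() if pattern.search(text)]
    if not hits or destination_covered_by_baa:
        return "allow", hits  # callers can still log hits for approved destinations
    if any(DETECTORS[name][1] == "high" for name in hits):
        return "block", hits  # high-confidence identifier headed to an unapproved service
    return "alert", hits      # weaker signal: notify security and coach the user


if __name__ == "__main__":
    print(dlp_decision("Pt SSN 123-45-6789, please summarize", False))
    # ('block', ['ssn'])
```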

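Deploy monitoring and audit logging systems capturing detailed AI usage information. Logs should track user identity, timestamp, AI service accessed, queries submitted, files processed, policy decisions applied, and any alerts generated. Because submitted queries may contain patient information, protect the logs with the same controls you apply to other PHI. HIPAA doesn’t set a specific retention period for audit logs, so define one in your written policies (many organizations align with the six-year retention requirement for HIPAA compliance documentation), and ensure the logs are available for security investigations and compliance audits.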
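A structured record makes these fields easy to search and correlate in a log platform. The sketch below writes one JSON Lines entry per AI interaction. The field names are assumptions chosen to match the list of fields recommended for AI usage logs, and the log sink is left to you.

```python
import json
from datetime import datetime, timezone
from typing import Iterable


def ai_audit_record(user_id: str, service: str, query: str, files: Iterable[str],
                    policy_decision: str, alerts: Iterable[str]) -> str:
    """Build one JSON Lines audit entry describing a single AI interaction."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "ai_service": service,
        "query": query,  # may contain PHI, so the log store needs PHI-grade protection
        "files_processed": list(files),
        "policy_decision": policy_decision,
        "alerts": list(alerts),
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    print(ai_audit_record("u123", "azure-openai-notes", "Summarize discharge guideline",
                          ["guideline.pdf"], "allow", []))
```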

Step 4: Develop Policies, Procedures, and Training

Technical controls alone don’t ensure compliance. Your workforce needs clear policies, practical procedures, and comprehensive training to use AI tools appropriately within the compliant framework you’ve created.

Create an AI Acceptable Use Policy specific to your healthcare organization. This policy should clearly define which AI tools are approved for use, what types of information can be processed with AI tools, prohibited activities that could violate HIPAA, authentication and access requirements, and consequences for policy violations. Make the policy practical and specific, using real-world examples that resonate with clinical and administrative staff.
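The approved-tools part of the policy can also be kept in machine-readable form. That way, the same list can feed the written policy and the enforcement tools (proxy, DLP). The sketch below assumes three hypothetical sensitivity levels and hypothetical tool names. Each tool gets the most sensitive data class it may receive, and unlisted tools are denied by default.

```python
from enum import IntEnum
from typing import Dict


class DataClass(IntEnum):
    """Ordered sensitivity levels; a higher value means more sensitive data."""
    PUBLIC = 0
    INTERNAL = 1
    PHI = 2


# Hypothetical registry: the most sensitive data class each approved tool may receive.
APPROVED_AI_TOOLS: Dict[str, DataClass] = {
    "m365-copilot": DataClass.PHI,             # only once a BAA covering it is signed
    "clinical-notes-assistant": DataClass.PHI,
    "literature-search": DataClass.PUBLIC,
}


def is_use_permitted(tool: str, data: DataClass) -> bool:
    """A tool may process data at or below its approved ceiling; unlisted tools get nothing."""
    ceiling = APPROVED_AI_TOOLS.get(tool)
    return ceiling is not None and data <= ceiling


if __name__ == "__main__":
    print(is_use_permitted("literature-search", DataClass.PHI))  # False
```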

Develop standard operating procedures for common AI use cases. Don’t just tell staff what not to do—show them how to accomplish legitimate tasks compliantly. Create documented procedures for using Copilot to draft clinical correspondence, leveraging AI for medical literature research, transcribing patient encounters with approved tools, and using AI to summarize lengthy documents. Clear procedures reduce confusion and increase adoption of compliant approaches.

Implement comprehensive training programs covering both policy and practical usage. Training should address HIPAA fundamentals and why AI creates specific risks, the technical controls your organization has implemented, how to access approved AI tools properly, recognizing PHI and understanding minimum necessary principles, and what to do if they accidentally disclose PHI through an AI tool. Make training engaging with realistic scenarios, hands-on practice with approved tools, and regular refreshers as tools and policies evolve.

Establish channels for staff to request new AI capabilities or report usability concerns with compliant tools. A successful AI compliance program balances control with enablement. When staff have a clear path to request approval for new AI tools or workflows, they’re less likely to implement unauthorized workarounds. This feedback loop also helps your compliance team stay ahead of emerging AI technologies.

Step 5: Monitor, Audit, and Continuously Improve

HIPAA compliance isn’t a one-time implementation—it’s an ongoing program requiring continuous monitoring, regular audits, and constant improvement as AI technologies and regulations evolve.

Establish regular monitoring of AI usage metrics and security indicators. Track which AI services are being used most heavily, identify users or departments with unusual AI usage patterns, monitor for policy violations or attempted violations, and analyze the types of queries or tasks staff are accomplishing with AI. This data informs resource allocation, training needs, and policy refinements.
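A simple way to spot unusual usage patterns is to compare each user’s or department’s daily AI request count against its own history. The sketch below uses a z-score for this comparison. The threshold, minimum history, and spread floor are hypothetical defaults. A SIEM or user behavior analytics tool would normally do this with more robust baselines.

```python
from statistics import mean, stdev
from typing import Dict, List


def flag_unusual_usage(history: Dict[str, List[int]], today: Dict[str, int],
                       threshold: float = 3.0, min_days: int = 7) -> List[str]:
    """Flag users or departments whose AI request count today is far above their baseline."""
    flagged = []
    for who, counts in history.items():
        if len(counts) < max(min_days, 2):
            continue  # not enough baseline to judge
        baseline = mean(counts)
        spread = max(stdev(counts), 1.0)  # floor keeps flat baselines from over-alerting
        z_score = (today.get(who, 0) - baseline) / spread
        if z_score > threshold:
            flagged.append(who)
    return flagged


if __name__ == "__main__":
    history = {"radiology": [10, 12, 11, 9, 10, 11, 12], "billing": [5, 5, 5, 5, 5, 5, 5]}
    print(flag_unusual_usage(history, {"radiology": 11, "billing": 40}))  # ['billing']
```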

Conduct periodic compliance audits specifically focused on AI usage. These audits should verify that only authorized AI services are being accessed, confirm that BAAs remain current and complete, test that technical controls are functioning as designed, review incident logs for potential PHI disclosures, and validate that training completion rates meet requirements. Document audit findings and remediation actions for regulatory examination.
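Parts of this audit can be scripted. The sketch below checks three items: whether every AI service in use sits under a signed BAA that lists it, whether each BAA was reviewed within a set interval, and whether training completion meets a target. The vendor names, review interval, and 95% target are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Dict, List, Set


@dataclass
class VendorAgreement:
    vendor: str
    baa_signed: bool
    services_covered: Set[str] = field(default_factory=set)
    last_reviewed: date = date.min


def periodic_audit(agreements: List[VendorAgreement], services_in_use: Dict[str, Set[str]],
                   trained: int, workforce: int, today: date,
                   review_every_days: int = 365, training_target: float = 0.95) -> List[str]:
    """Return findings for BAA coverage, stale BAA reviews, and training completion."""
    findings: List[str] = []
    by_vendor = {a.vendor: a for a in agreements}
    for vendor, services in sorted(services_in_use.items()):
        agreement = by_vendor.get(vendor)
        if agreement is None or not agreement.baa_signed:
            findings.append(f"{vendor}: no signed BAA on file")
            continue
        uncovered = services - agreement.services_covered
        if uncovered:
            findings.append(f"{vendor}: BAA does not list {sorted(uncovered)}")
        if today - agreement.last_reviewed > timedelta(days=review_every_days):
            findings.append(f"{vendor}: BAA review overdue")
    completion = trained / workforce if workforce else 0.0
    if completion < training_target:
        findings.append(f"training completion {completion:.0%} is below {training_target:.0%}")
    return findings


if __name__ == "__main__":
    agreements = [VendorAgreement("cloud-vendor", True, {"copilot"}, date(2024, 1, 15)),
                  VendorAgreement("dictation-vendor", False)]
    in_use = {"cloud-vendor": {"copilot"}, "dictation-vendor": {"transcription"}}
    for finding in periodic_audit(agreements, in_use, 190, 200, date(2024, 6, 1)):
        print(finding)
    # dictation-vendor: no signed BAA on file
```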

Stay current with evolving AI capabilities and regulatory guidance. The AI landscape changes rapidly, with new tools, features, and compliance approaches emerging constantly. Subscribe to healthcare compliance and health IT security resources, monitor vendor announcements about AI service changes, follow OCR guidance on AI and HIPAA, and participate in healthcare IT security communities sharing AI compliance practices.

Foster a culture of compliance and continuous improvement where staff feel empowered to raise concerns and suggest enhancements. The most effective compliance programs treat frontline staff as partners rather than potential violators. Celebrate examples of staff using AI tools compliantly and productively, recognize employees who identify compliance risks or improvement opportunities, and maintain open communication about why AI compliance matters for patient privacy and organizational sustainability.

Step 6: Partner with Healthcare IT and Compliance Experts

Implementing HIPAA-compliant AI involves complex technical, legal, and operational challenges that few healthcare organizations can navigate alone. Partnering with experienced specialists like Technijian accelerates implementation and reduces risk.

Healthcare IT specialists understand the unique requirements and constraints of medical environments. They bring experience from multiple implementations, knowledge of which approaches work in different healthcare settings, established relationships with technology vendors, and practical wisdom about common pitfalls. This expertise typically delivers faster time-to-value and more robust solutions than attempting implementation with only internal resources.

Compliance consultants bridge the gap between technical capabilities and regulatory requirements. They can interpret evolving HIPAA guidance as it applies to AI, advise on defensible positions when regulations are ambiguous, help document your compliance program for auditors, and provide expert testimony if compliance issues arise. This specialized knowledge protects your organization from compliance missteps that could be expensive to remediate.

Ongoing managed services provide the continuous expertise many healthcare organizations need. AI compliance isn’t a project with a clear endpoint—it’s an evolving program requiring sustained attention. Managed services can monitor your AI infrastructure for security and compliance issues, manage vendor relationships and contract renewals, implement updates and patches to maintain security, and serve as an extension of your compliance team.

The Future of AI in Healthcare Compliance

AI capabilities in healthcare will continue advancing rapidly, bringing both opportunities and compliance challenges. Forward-thinking healthcare organizations are preparing for these emerging scenarios.

Ambient clinical documentation using AI that listens to patient encounters and automatically generates clinical notes promises to dramatically reduce documentation burden. As these tools mature and gain HIPAA-compliant deployment options, healthcare organizations will need frameworks for ensuring these continuous audio processing systems maintain privacy while improving clinician satisfaction.

Diagnostic AI and clinical decision support integrated directly into care delivery will become increasingly sophisticated. As AI moves from assisting with administrative tasks to actively participating in clinical decisions, compliance programs will need to address AI-generated insights becoming part of the medical record, liability considerations when AI influences treatment decisions, and patient consent for AI involvement in their care.

Federated learning and privacy-preserving AI techniques enable training AI models on sensitive healthcare data without centralizing that data in ways that create privacy risks. Healthcare organizations may participate in collaborative AI development while maintaining local control over PHI, benefiting from models trained on broader populations without compromising individual patient privacy.
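The most common federated learning algorithm is federated averaging. Each site trains on its own data, and only model weights and example counts go to a coordinator, which averages them weighted by data size. The toy sketch below assumes a one-feature linear model and synthetic data split across two hypothetical sites. Shared weights can still leak information, so real deployments typically add protections such as secure aggregation or differential privacy.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) pairs held privately at one site


def local_update(weights: Sequence[float], data: List[Point],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Run gradient descent on one site's data for y ~ w0 + w1*x (mean squared error)."""
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        g0 = 2 / n * sum(w0 + w1 * x - y for x, y in data)
        g1 = 2 / n * sum((w0 + w1 * x - y) * x for x, y in data)
        w0, w1 = w0 - lr * g0, w1 - lr * g1
    return [w0, w1]


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """Average site weights, weighting each site by its number of examples."""
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def federated_round(global_weights: List[float], site_data: List[List[Point]]) -> List[float]:
    """One round: each site trains locally; only weights and counts reach the coordinator."""
    updates = [(local_update(global_weights, data), len(data)) for data in site_data]
    return federated_average(updates)


if __name__ == "__main__":
    # Synthetic data from y = 1 + 2x, split across two hypothetical sites.
    site_a = [(x, 1 + 2 * x) for x in (0.0, 0.5, 1.0)]
    site_b = [(x, 1 + 2 * x) for x in (0.25, 0.75)]
    weights = [0.0, 0.0]
    for _ in range(100):
        weights = federated_round(weights, [site_a, site_b])
    print([round(w, 2) for w in weights])  # approaches [1.0, 2.0]
```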

Regulatory frameworks will evolve to address AI specifically. While current HIPAA regulations technically cover AI usage, expect more specific guidance and potentially new regulations addressing AI in healthcare. Forward-thinking organizations build flexible compliance programs that can adapt to regulatory evolution rather than rigid approaches tied to today’s requirements.

The key is establishing a compliance framework today that can incorporate emerging AI capabilities as they mature rather than reacting to each new AI tool independently. Organizations with mature AI governance programs will adopt beneficial innovations faster and more safely than those scrambling to retrofit compliance onto AI usage already embedded in clinical workflows.

Conclusion: AI Innovation Without HIPAA Compromise

HIPAA-compliant AI usage in healthcare isn’t just possible—it’s essential for organizations that want to remain competitive while protecting patient privacy and avoiding regulatory sanctions. The choice between AI innovation and compliance is a false dilemma created by improper implementation, not an inherent limitation of the technology.

The difference between healthcare organizations that successfully leverage AI and those that face compliance incidents often comes down to architecture, agreements, and oversight. When you establish the proper legal foundations with BAAs, deploy AI capabilities within secure technical frameworks like VDI or appropriately configured cloud services, implement comprehensive monitoring and controls, and train your workforce on compliant usage, you create an environment where AI enhances healthcare delivery without compromising patient trust.

That’s where Technijian excels.

Ready to enable your healthcare organization to use AI tools safely and compliantly? Technijian’s team of healthcare IT specialists combines deep HIPAA compliance expertise with practical implementation experience to build AI environments that work for healthcare, not against it.

Frequently Asked Questions (FAQ)

What is a Business Associate Agreement and why do I need one for AI tools?

A Business Associate Agreement (BAA) is a contract HIPAA requires between a covered entity and any vendor or service provider that creates, receives, maintains, or transmits Protected Health Information on its behalf. Such a vendor is a “business associate” under HIPAA, and the BAA is where it accepts legal responsibility for protecting patient data and following HIPAA requirements. For AI tools, you need a BAA if the AI service will process any patient information, because sharing PHI with an AI provider without one is an unauthorized disclosure under HIPAA. Consumer versions of ChatGPT, Claude, and similar tools don’t offer BAAs and therefore can’t legally be used with patient information. Enterprise offerings from Microsoft, Google, OpenAI, and other providers do offer BAAs to healthcare customers, but you must confirm that the BAA is signed and covers the specific AI services you’re using before processing any PHI.
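One way to put this rule into practice is a default-deny check that internal tooling (for example, an AI gateway) runs before routing any PHI-bearing request to an AI service. This is a minimal sketch: the registry keys and entries are hypothetical placeholders that would, in reality, be maintained from your organization’s own signed agreements. It separates “we have a BAA with this vendor” from “the BAA covers this specific service,” since only the second makes PHI processing permissible.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class AIService:
    name: str
    baa_signed: bool       # a BAA with this vendor has been executed
    covered_by_baa: bool   # the BAA explicitly covers this specific service


# Hypothetical registry; keys and entries are placeholders, not real products.
REGISTRY: Dict[str, AIService] = {
    "consumer-chatbot": AIService("Consumer chatbot", False, False),
    "enterprise-assistant": AIService("Enterprise assistant", True, True),
    "vendor-preview-feature": AIService("Vendor preview feature", True, False),
}


def may_process_phi(service_key: str) -> bool:
    """Default deny: unknown services, or services outside the BAA, get no PHI."""
    service = REGISTRY.get(service_key)
    return service is not None and service.baa_signed and service.covered_by_baa


for key in ("enterprise-assistant", "vendor-preview-feature", "consumer-chatbot", "new-tool"):
    print(key, may_process_phi(key))
```

Denying unknown keys by default means a newly released AI feature stays blocked until someone confirms it is in scope.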

Can we just anonymize patient data before using it with AI tools?

Anonymization (HIPAA calls it de-identification) sounds simple but is extremely difficult to achieve reliably. Under HIPAA’s Safe Harbor method, 18 specific identifiers must be removed to create de-identified health information, including names, dates, addresses, medical record numbers, and even ZIP codes. Removing those identifiers is not the whole job: Safe Harbor also requires that you have no actual knowledge that the remaining information could identify an individual, which is hard to rule out when detailed clinical information is involved. The combination of age range, diagnosis, treatment history, and other clinical details can often identify patients even without explicit names or numbers. Additionally, if you keep a code for linking the data back to specific patients, that code must not be derived from patient information and must not be disclosed; otherwise the data doesn’t qualify as de-identified under HIPAA. For most healthcare AI use cases, reliable de-identification is impractical, which makes compliant AI tools covered by BAAs the more dependable approach.
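The sketch below shows why pattern-based scrubbing falls short. It uses Python’s standard `re` module to mask only five of the 18 Safe Harbor identifiers in a hypothetical note; the patterns are illustrative assumptions, not a complete or validated de-identification tool. The patient’s name and the clinically distinctive detail pass straight through.

```python
import re

# Illustrative patterns for only FIVE of the 18 Safe Harbor identifiers.
PATTERNS = {
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:#]?\s*\d+\b", re.IGNORECASE),
    "ZIP": re.compile(r"\b\d{5}(?:-\d{4})?\b"),
}


def scrub(text: str) -> str:
    """Replace each matched pattern with a bracketed label, in dict order."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Hypothetical note; no real patient data.
note = ("Pt Jane Roe, MRN 448812, seen 03/14/2024, ZIP 92618, call 949-555-0100. "
        "Only patient in the county with Fabry disease.")
scrubbed = scrub(note)
print(scrubbed)
# Pt Jane Roe, [MRN], seen [DATE], ZIP [ZIP], call [PHONE]. Only patient in the county with Fabry disease.
```

Catching the name would need yet another rule, and the final sentence would still point to one identifiable person, which is exactly the re-identification problem described above.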

Is Microsoft Copilot automatically HIPAA-compliant if we have Microsoft 365?

No. Having Microsoft 365 doesn’t automatically make Copilot HIPAA-compliant for healthcare use. You need specific licensing (typically E5 or equivalent), a signed BAA with Microsoft that covers AI services, proper configuration of compliance features including data loss prevention and information protection, appropriate geographic data processing settings, and correctly implemented audit logging and monitoring. The consumer or basic business versions of Copilot don’t meet HIPAA requirements even if you have a Microsoft 365 subscription. Healthcare organizations must work with Microsoft or qualified partners to configure Copilot for HIPAA-eligible use, enable the right compliance features, and ensure their environment maintains the necessary controls. It’s also critical that staff understand which Microsoft AI services are covered by your BAA and which aren’t, as Microsoft offers various AI tools with different compliance postures.

What is Virtual Desktop Infrastructure and why does it help with AI compliance?

Virtual Desktop Infrastructure (VDI) provides virtual desktops that run on centralized servers while users access them from various endpoint devices. For AI compliance, VDI is valuable because it creates a controlled environment where you can tightly manage which AI tools are available, enforce policies preventing unauthorized AI usage, monitor AI interactions through centralized logging, prevent data from leaving your secure environment, and rapidly update configurations across all users. When staff access AI tools through VDI, PHI stays within the VDI session running in your data center or secure cloud environment instead of being stored on their own devices. VDI acts as a policy enforcement layer, allowing you to block clipboard operations that could copy PHI to unauthorized tools, restrict file downloads that could exfiltrate data, and monitor activity for compliance verification. Many healthcare organizations find VDI provides the right balance of security control and user productivity for enabling AI usage.
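As an illustration of the policy-enforcement idea, the sketch below shows the decision logic an egress filter in front of VDI sessions might apply: only hosts of AI services covered by your BAA are reachable, and everything else is denied. The host name is a hypothetical placeholder (Azure OpenAI resources are reached at `<resource>.openai.azure.com`). In practice this rule would live in your proxy, firewall, or VDI platform configuration, alongside that platform’s own clipboard and download-redirection settings, rather than in application code.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of BAA-covered AI endpoints reachable from VDI sessions.
ALLOWED_AI_HOSTS = frozenset({"example-health.openai.azure.com"})


def egress_allowed(url: str) -> bool:
    """Exact host match; a broad suffix rule like '.azure.com' would admit unrelated hosts."""
    host = (urlparse(url).hostname or "").lower()
    return host in ALLOWED_AI_HOSTS


print(egress_allowed("https://example-health.openai.azure.com/openai/deployments/notes"))  # True
print(egress_allowed("https://chat.openai.com/"))  # False
print(egress_allowed("https://example-health.openai.azure.com.attacker.test/"))  # False
```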

How much does HIPAA-compliant AI implementation cost?

Costs vary significantly based on your organization’s size, existing infrastructure, and the scope of AI services you’re implementing. Small practices might spend $10,000-$30,000 for initial setup including licensing, configuration, training, and compliance consultation. Mid-sized healthcare organizations typically invest $50,000-$150,000 for comprehensive implementations covering multiple AI tools, VDI infrastructure, and ongoing management. Large health systems with complex needs may invest several hundred thousand dollars for enterprise-wide AI enablement. Ongoing costs include licensing for enterprise AI services ($20-$60 per user per month), VDI infrastructure and management, compliance monitoring and auditing, and regular training programs. Many healthcare organizations expect these investments to pay off through productivity gains, reduced documentation time, improved clinical decision support, and fewer compliance incidents. Technijian provides transparent pricing after understanding your specific environment, existing infrastructure, and AI usage goals during the discovery phase.
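The per-user licensing range translates directly into an annual budget line. This minimal sketch multiplies the $20-$60 per user per month figures by headcount and twelve months for a hypothetical 50-user organization; it covers licensing only, not VDI, monitoring, or training.

```python
from typing import Tuple


def annual_licensing_range(users: int, per_user_low: float = 20.0,
                           per_user_high: float = 60.0) -> Tuple[float, float]:
    """Twelve months of per-user, per-month licensing as a (low, high) range."""
    return users * per_user_low * 12, users * per_user_high * 12


# Hypothetical 50-user organization.
low, high = annual_licensing_range(50)
print(f"${low:,.0f} to ${high:,.0f} per year")  # $12,000 to $36,000 per year
```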

What happens if someone accidentally enters PHI into an unauthorized AI tool?

If PHI is disclosed to an unauthorized AI service, you potentially have a HIPAA breach that must be evaluated and possibly reported. First, document the incident immediately, including what information was disclosed, which AI service received it, who made the disclosure, and when it occurred. Contain the situation by having the user stop using the tool and, if possible, delete the conversation history (though this doesn’t guarantee deletion from the vendor’s systems). Then conduct a documented risk assessment covering the four factors HIPAA specifies: the nature and extent of the PHI involved, including the likelihood of re-identification; the unauthorized party that received it (here, the AI vendor); whether the PHI was actually acquired or viewed; and the extent to which the risk has been mitigated. Unless that assessment demonstrates a low probability that the PHI has been compromised, the incident is presumed to be a breach, and you must notify affected patients and HHS, as well as the media if more than 500 residents of a state or jurisdiction are affected. Use the incident as a training opportunity, not just an occasion for punishment, to reinforce proper AI usage policies. This scenario shows why implementing compliant AI infrastructure before usage becomes widespread is so critical.
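The federal notification logic can be summarized in a short decision function. This is a simplified sketch of the Breach Notification Rule (45 CFR 164.404 through 164.408) under stated assumptions: the `BreachFacts` fields are hypothetical inputs you would fill in from your documented risk assessment, and the function ignores state breach laws, law-enforcement delays, and business associate notice obligations.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BreachFacts:
    total_affected: int
    max_residents_in_one_state: int      # largest count in any single state or jurisdiction
    low_probability_of_compromise: bool  # conclusion of the documented risk assessment


def required_notifications(facts: BreachFacts) -> List[str]:
    """Simplified federal notification duties; state laws are not modeled."""
    if facts.low_probability_of_compromise:
        return []  # not a reportable breach, but keep the assessment on file
    notices = ["individuals: without unreasonable delay, no later than 60 days after discovery"]
    if facts.total_affected >= 500:
        notices.append("HHS: at the same time as the individual notices")
    else:
        notices.append("HHS: annual log, within 60 days after the calendar year ends")
    if facts.max_residents_in_one_state > 500:
        notices.append("media: prominent outlets serving the affected state or jurisdiction")
    return notices


# Hypothetical incident: one clinician pasted one patient's note into a consumer chatbot.
for notice in required_notifications(BreachFacts(1, 1, False)):
    print(notice)
```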

Can we use AI tools for research without HIPAA concerns?

Using AI tools for medical research still involves HIPAA compliance requirements if you’re working with PHI. Research use of patient information requires patient authorization, a waiver of authorization approved by an IRB or privacy board, or properly de-identified data. If your research involves identifiable patient information, you need the same HIPAA-compliant AI infrastructure and BAAs that clinical operations require. However, if you’re working with truly de-identified data that cannot be linked back to individuals, HIPAA requirements don’t apply and you have more flexibility in tool selection. Many researchers use AI tools for literature review, hypothesis generation, statistical analysis planning, and writing assistance without HIPAA concerns because these activities don’t involve patient data. The key is carefully evaluating whether any specific AI usage involves PHI and applying appropriate safeguards when it does.

Do patients have the right to know if AI was used in their care?

This is an evolving area of healthcare law and ethics. Currently, HIPAA doesn’t specifically require disclosing AI usage in care delivery, but general medical ethics principles of informed consent and transparency suggest patients should be informed when AI plays a significant role in diagnosis or treatment decisions. Many healthcare organizations are updating their Notice of Privacy Practices to disclose that AI tools may be used for documentation, care coordination, and clinical decision support. Patient attitudes toward AI in healthcare vary widely—some patients appreciate AI’s potential to improve accuracy and efficiency, while others have concerns about privacy or prefer human-only care. Proactive transparency about AI usage, including how patient privacy is protected, generally builds trust more effectively than discovering AI involvement after the fact. Expect regulatory guidance in this area to evolve as AI becomes more prevalent in healthcare delivery.

How does Technijian help healthcare organizations implement compliant AI?

Technijian provides comprehensive support for healthcare organizations navigating AI implementation while maintaining HIPAA compliance. Our services begin with a thorough assessment of your current AI usage (authorized and unauthorized), existing infrastructure and how it can support compliant AI, priority use cases where AI delivers the most value, and your compliance program maturity. We then design and implement technical solutions including VDI infrastructure for secure AI access, BAA-covered AI services properly configured for healthcare, data loss prevention and monitoring systems, and integration with your existing identity management and security tools. Beyond technical implementation, Technijian provides policy development for acceptable AI usage, comprehensive staff training on compliant AI practices, ongoing monitoring and compliance support, and regular updates as AI capabilities and regulations evolve. Think of Technijian as your extended healthcare IT and compliance team, bringing specialized expertise that most healthcare organizations don’t maintain in-house while allowing your internal staff to focus on patient care rather than technical AI implementation.

What’s the difference between ChatGPT, ChatGPT Enterprise, and Azure OpenAI Service?

These represent three different deployment models for OpenAI’s language models with very different compliance implications. ChatGPT is the consumer-facing web interface at chat.openai.com: it’s free or low-cost and easy to use, but it isn’t HIPAA-compliant and can’t be used with patient information because it lacks BAA coverage and data isolation. ChatGPT Enterprise is OpenAI’s business tier that offers enhanced privacy, administrative controls, and the ability to establish BAAs, making it potentially suitable for healthcare with proper configuration. Azure OpenAI Service provides OpenAI’s models through a resource in your own Azure subscription, deployed in a region you choose and covered by your existing BAA with Microsoft, which gives you the most control because access, networking, and logging follow your Azure configuration. For healthcare organizations already using Microsoft Azure, Azure OpenAI Service typically provides the most straightforward path to compliant AI usage because it leverages your existing Azure security controls, networking, and compliance framework. Technijian helps healthcare organizations evaluate which deployment model best fits their technical environment, compliance requirements, and use cases.
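To illustrate how Azure OpenAI Service ties usage to your own Azure environment: requests go to an endpoint named after your Azure resource and a model deployment you created, authenticated with that resource’s credentials. The standard-library sketch below only builds such a request and does not send it. The resource name, deployment name, and key are hypothetical placeholders, and the `api-version` value is an assumption to check against Microsoft’s current documentation.

```python
import json
import urllib.request


def build_chat_request(resource: str, deployment: str, api_key: str, prompt: str,
                       api_version: str = "2024-02-01") -> urllib.request.Request:
    """Build (but do not send) an Azure OpenAI chat completions request."""
    url = (f"https://{resource}.openai.azure.com/openai/deployments/"
           f"{deployment}/chat/completions?api-version={api_version}")
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "api-key": api_key},
    )


# Hypothetical resource, deployment, and key; nothing is sent over the network.
req = build_chat_request("example-health", "notes-summarizer", "<your-key>",
                         "Summarize this de-identified note: ...")
print(req.get_method(), req.full_url)
```

Because the hostname belongs to your resource, the same egress allowlisting, private networking, and logging you apply to other Azure services can be applied here.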

Take the Next Step with Technijian

Don’t let HIPAA compliance concerns prevent your healthcare organization from leveraging AI’s transformative potential. Technijian’s healthcare IT and compliance expertise can build AI environments that enable innovation while ensuring bulletproof patient privacy protection.

Contact Technijian today to:

Schedule a free AI compliance assessment for your healthcare organization
See demonstrations of HIPAA-compliant AI implementations
Discuss your specific AI use cases and compliance challenges
Get a customized roadmap for secure AI deployment

Ready to empower your clinicians and staff with AI tools they can use confidently? Technijian makes HIPAA-compliant AI accessible, practical, and aligned with your patient care mission.

About Technijian

Technijian is a premier provider of Managed IT Services and AI-powered workflow automation solutions, specializing in connecting enterprise communication and support platforms. With deep expertise in Microsoft Teams, 3CX, and major helpdesk systems, Technijian helps businesses transform fragmented tech stacks into cohesive, intelligent ecosystems that drive efficiency, improve customer satisfaction, and support scalable growth.

Technijian specializes in delivering secure, scalable, and innovative AI and technology solutions across Orange County and Southern California. Founded in 2000 by Ravi Jain as a one-man IT shop, the company has evolved into a trusted technology partner with teams of engineers, AI specialists, and cybersecurity professionals both in the U.S. and internationally.

Headquartered in Irvine, we provide comprehensive cybersecurity solutions, IT support, AI implementation services, and cloud services throughout Orange County—from Aliso Viejo, Anaheim, Costa Mesa, and Fountain Valley to Newport Beach, Santa Ana, Tustin, and beyond. Our extensive experience with enterprise security deployments, combined with our deep understanding of local business needs, makes us the ideal partner for organizations seeking to implement security solutions that provide real protection.

We work closely with clients across diverse industries including healthcare, finance, law, retail, and professional services to design security strategies that reduce risk, enhance productivity, and maintain the highest protection standards. Our Irvine-based office remains our primary hub, delivering the personalized service and responsive support that businesses across Orange County have relied on for over two decades.

With expertise spanning cybersecurity, managed IT services, AI implementation, consulting, and cloud solutions, Technijian has become the go-to partner for small to medium businesses seeking reliable technology infrastructure and comprehensive security capabilities. Whether you need Cisco Umbrella deployment in Irvine, DNS security implementation in Santa Ana, or phishing prevention consulting in Anaheim, we deliver technology solutions that align with your business goals and security requirements.

Partner with Technijian and experience the difference of a local IT company that combines global security expertise with community-driven service. Our mission is to help businesses across Irvine, Orange County, and Southern California harness the power of advanced cybersecurity to stay protected, efficient, and competitive in today’s threat-filled digital world.